Hi all

As a follow-up to my previous topic about R Markdown, I would like to make a simple stand-alone Shiny app, nothing big with servers and so on.

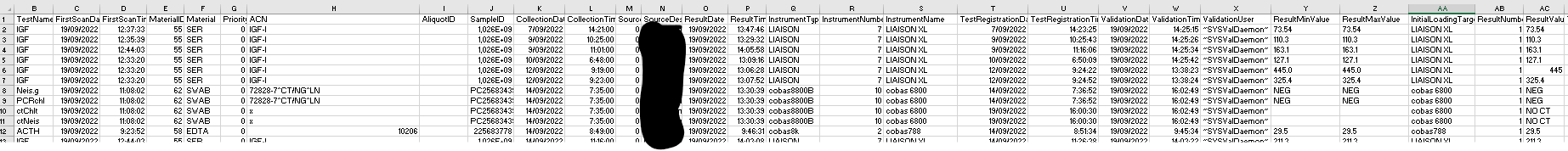

The goal is to import the .txt file with the data and to remove the rows with NA or empty values...

I use the following code:

```r
# The file exported from the Infinity server must be in this folder; read it in
# (empty cells, single spaces and "NA" are all read as NA):
setwd("G:/My Drive/Traineeship Advanced Bachelor of Bioinformatics 2022/Internship 2022-2023/internship documents/")
server_data <- read.delim(file = "PostCheckAnalysisRoche.txt", header = TRUE,
                          na.strings = c("", " ", "NA"))

# Create a subset of the data that excludes the unnecessary columns:
subset_data_server <- server_data[, c(-5:-7, -9, -17, -25:-31)]

# Remove the rows with blank/NA values:
df1 <- na.omit(subset_data_server)

# The turnaround time (TAT) is the difference between the result time and the first-scan time:
df1$TS_start <- paste(df1$FirstScanDate, df1$FirstScanTime)
df1$TS_end <- paste(df1$ResultDate, df1$ResultTime)
df1$TAT <- difftime(df1$TS_end, df1$TS_start, units = "mins")
```
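Because Shiny code is hard to debug chunk by chunk, one option is to wrap the preparation steps in a plain function that can be checked in the console first. A minimal sketch, assuming dates look like "2023-01-10" and times like "08:15:00" (the default formats `as.POSIXct()` tries); the function name `add_tat` and the toy rows are hypothetical, and the column-dropping step is left out because it depends on the real file layout.

```r
# Hypothetical helper: drop incomplete rows and add the turnaround time (TAT).
# Assumes dates like "2023-01-10" and times like "08:15:00".
add_tat <- function(df) {
  df <- na.omit(df)                                    # drop rows with NA/blank values
  df$TS_start <- as.POSIXct(paste(df$FirstScanDate, df$FirstScanTime))
  df$TS_end   <- as.POSIXct(paste(df$ResultDate, df$ResultTime))
  df$TAT      <- difftime(df$TS_end, df$TS_start, units = "mins")
  df
}

# Usage with a tiny hypothetical tab-separated file (S2 has a blank scan time)
txt <- paste("SampleID\tFirstScanDate\tFirstScanTime\tResultDate\tResultTime",
             "S1\t2023-01-10\t08:15:00\t2023-01-10\t09:00:00",
             "S2\t2023-01-10\t\t2023-01-10\t10:30:00",
             "S3\t2023-01-10\t13:05:00\t2023-01-10\t13:45:30",
             sep = "\n")
raw <- read.delim(text = txt, header = TRUE, na.strings = c("", " ", "NA"))
df1 <- add_tat(raw)
as.numeric(df1$TAT)   # 45.0 40.5
```

Converting with `as.POSIXct()` explicitly makes a wrong date format fail at this step rather than later inside the app.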

In my R Markdown document this works well, and I hope it gives the same result in the Shiny app. Now that the data is more or less prepared, I would like to make a histogram/bar plot showing the number of samples over time (1-hour intervals), with a dropdown menu to select an extra filter. For example: show the histogram of the number of samples per hour for `$Source` (location) A, or the number of samples per hour for `$test` IGF...
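The per-hour count can also be computed without `aggregate()` and the renaming steps. A minimal base-R sketch, assuming `ResultTime` is stored as text like "08:15:00"; `count_per_hour` is a hypothetical name. Using `factor(levels = 0:23)` keeps hours with no samples as 0, and naming the columns explicitly avoids depending on the column name that `as.data.frame()` derives from the whole expression (which changes when the input changes, e.g. from `df1` to `df1_filtered`).

```r
# Hypothetical helper: number of samples per hour of the day (0-23).
# Assumes times stored as text like "08:15:00".
count_per_hour <- function(times) {
  hours  <- as.integer(sub(":.*$", "", times))   # "08:15:00" -> 8
  counts <- table(factor(hours, levels = 0:23))  # empty hours are kept as 0
  data.frame(hour = 0:23,
             `amount of samples` = as.vector(counts),
             check.names = FALSE)                # keep the space in the name
}

res <- count_per_hour(c("08:15:00", "08:59:59", "13:45:30"))
res[res$`amount of samples` > 0, ]   # hour 8: 2 samples, hour 13: 1 sample
```

The result has the same `hour` and `amount of samples` columns as the tables used in the plots, so it could feed them directly.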

In R Markdown, I used the following code to make bar plots of the number of samples per hour, over all samples:

```r
library(ggplot2)

# geom_col() draws the pre-computed counts as bars (same as geom_bar(stat = "identity"));
# inside aes() the columns are referred to by name, not with df$column.

# Number of first scans per hour
ggplot(data = df_aggr_First_scan,
       mapping = aes(x = hour, y = `amount of samples`)) +
  geom_col(color = "blue", fill = "lightblue") +
  labs(title = "amount of first-scan times over the day", x = "hours", y = "amount of samples") +
  theme(plot.title = element_text(hjust = 0.5)) +
  scale_x_continuous(limits = c(-0.5, 24), breaks = seq(0, 23, 1))

# Number of results per hour
ggplot(data = df_aggr_Result,
       mapping = aes(x = hour, y = `amount of samples`)) +
  geom_col(color = "blue", fill = "lightblue") +
  labs(title = "amount of results over the day", x = "hours", y = "amount of samples") +
  theme(plot.title = element_text(hjust = 0.5)) +
  scale_x_continuous(limits = c(-0.5, 24), breaks = seq(0, 23, 1))
```
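The two plots differ only in their data and title, so one option is a small function that each `renderPlot()` call could reuse. A sketch, assuming the count tables have the columns `hour` and `amount of samples` as in the post; `plot_per_hour` and the toy table are hypothetical.

```r
library(ggplot2)

# Hypothetical helper: one bar per hour from a table with the columns
# `hour` and `amount of samples`.
plot_per_hour <- function(counts, title) {
  ggplot(counts, aes(x = hour, y = `amount of samples`)) +
    geom_col(color = "blue", fill = "lightblue") +
    labs(title = title, x = "hours", y = "amount of samples") +
    theme(plot.title = element_text(hjust = 0.5)) +
    scale_x_continuous(limits = c(-0.5, 24), breaks = seq(0, 23, 1))
}

# Usage with a small hypothetical count table
counts <- data.frame(hour = c(8, 13), `amount of samples` = c(2, 1),
                     check.names = FALSE)
p <- plot_per_hour(counts, "amount of results over the day")
# In the Shiny document: renderPlot({ plot_per_hour(df_aggr_Result, "amount of results over the day") })
```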

But before making the histogram, I need to filter the data based on the `selectInput` value. I have already tried some code, but `aggregate` gives an error, apparently because it cannot find any rows:

```r
library(lubridate)

selectInput("Validation source", label = "Who/What performed the validation:",
            choices = df1$ValidationUser, selected = "~SYSValDaemon~")

Input <- selectInput

df1_filtered <- df1[df1$ValidationUser == 'selectInput']

# Calculate the amount of Result-samples each hour:
df2 <- as.data.frame(hour(hms(df1_filtered$ResultTime)))

df_aggr_Result <- aggregate(df2, by = list(df2$`hour(hms(df1$ResultTime))`), FUN = length)

# Renaming
names(df_aggr_Result)[names(df_aggr_Result) == "Group.1"] <- "hour"
names(df_aggr_Result)[names(df_aggr_Result) == "hour(hms(df1$ResultTime))"] <- "amount of samples"

renderPlot({
  barplot(df_aggr_Result, probability = TRUE,
          breaks = seq(0, 23, 1),
          xlab = "hour",
          main = "amount of samples")
})
```
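In the attempt above, `df1$ValidationUser == 'selectInput'` compares against the literal text `'selectInput'` rather than the chosen value, and without a comma `df1[...]` selects columns instead of rows. In a Shiny document the chosen value is read as `input$<id>` inside a reactive context such as `reactive()` or `renderPlot()`; an id without spaces (e.g. the hypothetical `validation_source`) is easier to write as `input$validation_source`. A minimal sketch of the row filter as a plain function that can be tried in the console; `filter_rows` and the extra `"All"` choice are hypothetical.

```r
# Hypothetical helper: keep the rows (note the comma) where `column` equals
# `value`; the extra choice "All" returns every row.
filter_rows <- function(df, column, value) {
  if (identical(value, "All")) return(df)
  df[df[[column]] %in% value, , drop = FALSE]   # %in% treats NA as "no match"
}

# Usage with a small hypothetical data frame
df1 <- data.frame(ValidationUser = c("~SYSValDaemon~", "jdoe", "~SYSValDaemon~"),
                  ResultTime     = c("08:15:00", "09:30:00", "13:05:00"))
nrow(filter_rows(df1, "ValidationUser", "~SYSValDaemon~"))   # 2

# In the Shiny document the value would come from the input, e.g.
# df1_filtered <- reactive(filter_rows(df1, "ValidationUser", input$validation_source))
```

Because the filtered data is then reactive, it is used as `df1_filtered()` (with parentheses) inside `renderPlot()`.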

My script is still a draft, and there is room for (aesthetic) improvements. Since it is not really possible to run the code chunks (with dynamic `renderPlot` calls) separately, debugging the script takes a lot of time. An extra addition could be radio buttons at the top to choose which column to filter on (e.g. `$test`), below them a dropdown menu to choose the filter value, and below that the histogram.
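The layout described above (radio buttons, then a dropdown whose choices depend on the chosen column, then the plot) could be sketched as a single-file app. This is a minimal sketch, not a tested solution for the real data: the column names `Source`, `test`, `ValidationUser` and `ResultTime` come from the post, while the toy rows, the `"All"` choice and the input ids are hypothetical, and `ResultTime` is assumed to be text like "08:15:00". The same `ui` and `server` can also be embedded in a chunk of a `runtime: shiny` R Markdown document with `shinyApp(ui, server)`.

```r
library(shiny)

# Toy stand-in for the prepared df1 (hypothetical values, for illustration only);
# replace it with the data frame produced by the preparation code.
df1 <- data.frame(
  Source         = c("A", "A", "B", "B", "A"),
  test           = c("IGF", "TSH", "IGF", "IGF", "TSH"),
  ValidationUser = c("~SYSValDaemon~", "jdoe", "~SYSValDaemon~", "jdoe", "~SYSValDaemon~"),
  ResultTime     = c("08:15:00", "08:40:00", "13:05:00", "13:50:00", "17:20:00"),
  stringsAsFactors = FALSE
)
filter_columns <- c("Source", "test", "ValidationUser")

ui <- fluidPage(
  # 1. Which column to filter on
  radioButtons("column", "Filter on column:", choices = filter_columns),
  # 2. Which value of that column (the choices are filled in by the server)
  selectInput("value", "Value:", choices = NULL),
  # 3. The per-hour bar plot
  plotOutput("hour_plot")
)

server <- function(input, output, session) {
  # Refresh the dropdown whenever another column is chosen
  observeEvent(input$column, {
    updateSelectInput(session, "value",
                      choices = c("All", sort(unique(df1[[input$column]]))))
  })

  # Rows matching the current selection (note the comma: rows, all columns)
  filtered <- reactive({
    req(input$column, input$value)           # wait until both inputs have a value
    if (input$value == "All") return(df1)
    df1[df1[[input$column]] %in% input$value, , drop = FALSE]
  })

  output$hour_plot <- renderPlot({
    hours  <- as.integer(sub(":.*$", "", filtered()$ResultTime))  # "08:15:00" -> 8
    counts <- table(factor(hours, levels = 0:23))                 # keep empty hours
    barplot(counts, xlab = "hour", ylab = "amount of samples",
            main = paste("amount of results per hour:", input$column, "=", input$value),
            col = "lightblue", border = "blue")
  })
}

# Start the app only in an interactive session
if (interactive()) shinyApp(ui, server)
```

Keeping the filtering and counting in `reactive()`/`renderPlot()` means every change of the radio buttons or the dropdown recomputes only what depends on it.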

Hope you can help!

Thanks a lot!