I am using the noctua package to send data to AWS S3 through the DBI interface, e.g.:

```r
# con_s3 is an existing noctua (DBI) connection
dbWriteTable(conn = con_s3,
             name = paste0("revenue_predictions.", game_name),
             value = cohort_data,
             append = TRUE,
             overwrite = FALSE,
             file.type = "json",
             partition = c(year = yr, month = mt, day = d),
             s3.location = "s3://ourco-emr/tables/revenue_predictions.db", # not our real name :)
             max.batch = 100000)
```

This works: my data frame `cohort_data` is sent to S3.

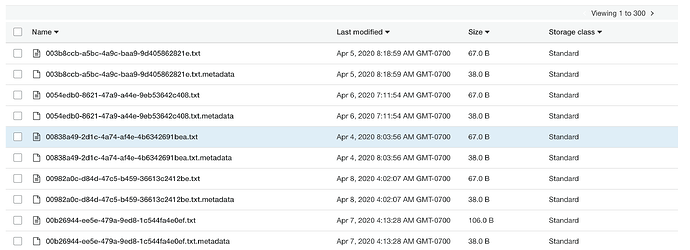

noctua appears to update the Athena metastore with .txt files: when I log in to S3, I see files of the form:

We use the Hive metastore rather than the Athena metastore, and I add partitions through a separate `hive_con`, so these files are not needed.
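For context, adding a partition through `hive_con` might look roughly like the sketch below. The helper `build_add_partition_sql()` is hypothetical: it assumes `hive_con` is a DBI connection to Hive and that the partition columns are `year`, `month` and `day`, as in the `dbWriteTable()` call. It only builds the HiveQL string, so check the quoting against your table's partition column types.

```r
# Hypothetical helper: builds a HiveQL statement that registers one
# year/month/day partition of an existing table. All values are quoted
# as strings; adjust if your partition columns are typed differently.
build_add_partition_sql <- function(table, year, month, day) {
  sprintf(
    "ALTER TABLE %s ADD IF NOT EXISTS PARTITION (year='%s', month='%s', day='%s')",
    table, year, month, day
  )
}

# Usage with an existing DBI connection to Hive (hive_con is assumed to exist):
# DBI::dbExecute(hive_con, build_add_partition_sql(
#   paste0("revenue_predictions.", game_name), yr, mt, d))
```

Without a `LOCATION` clause, Hive places the partition under the table's location using the `year=.../month=.../day=...` directory convention.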

These files sit in the same directory as my table `paste0("revenue_predictions.", game_name)`. I tried deleting all of the .txt metadata files, leaving just the directory with the JSON data, and my queries still run, so as far as I can tell these .txt files are not needed.
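Until there is a way to stop the files from being written, the manual deletion described above could be scripted along these lines. This is a minimal sketch, assuming the paws package (the AWS SDK for R that noctua builds on) and working AWS credentials. `delete_txt_files()` and `txt_keys()` are hypothetical helpers, and the bucket and prefix are placeholders. It defaults to a dry run that only returns the matching keys.

```r
# Pure helper: keep only keys that end in ".txt" (the metadata files).
txt_keys <- function(keys) {
  keys[grepl("\\.txt$", keys)]
}

# Sketch: list every object under a prefix (following pagination) and
# delete the .txt ones. Assumes paws is installed and credentials are set.
delete_txt_files <- function(bucket, prefix, dry_run = TRUE) {
  s3 <- paws::s3()
  keys <- character(0)
  token <- NULL
  repeat {
    resp <- s3$list_objects_v2(Bucket = bucket, Prefix = prefix,
                               ContinuationToken = token)
    keys <- c(keys, vapply(resp$Contents, function(obj) obj$Key, character(1)))
    if (!isTRUE(resp$IsTruncated)) break
    token <- resp$NextContinuationToken
  }
  targets <- txt_keys(keys)
  # Only delete when explicitly asked to; otherwise just report candidates.
  if (!dry_run) {
    for (key in targets) s3$delete_object(Bucket = bucket, Key = key)
  }
  targets
}
```

For example, `delete_txt_files("ourco-emr", "tables/revenue_predictions.db/")` lists the candidates, and `dry_run = FALSE` deletes them. This treats the symptom after each write rather than preventing `dbWriteTable()` from creating the files.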

Assuming these files are created by noctua's attempt to update the Athena metastore, is there a way to prevent `noctua::dbWriteTable()` from creating them?