Hi, everyone!

I was trying PyTorch with a GPU in R. The problem is this: first, I tried directly in Python, and the following code works:

import torch

dtype = torch.float

#device = torch.device("cpu")

device = torch.device("cuda:0") # Run on the GPU

torch.randn(4, 4, device=device, dtype=dtype)

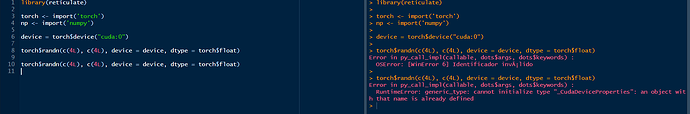

However, I ran into problems running the same code in R with reticulate:

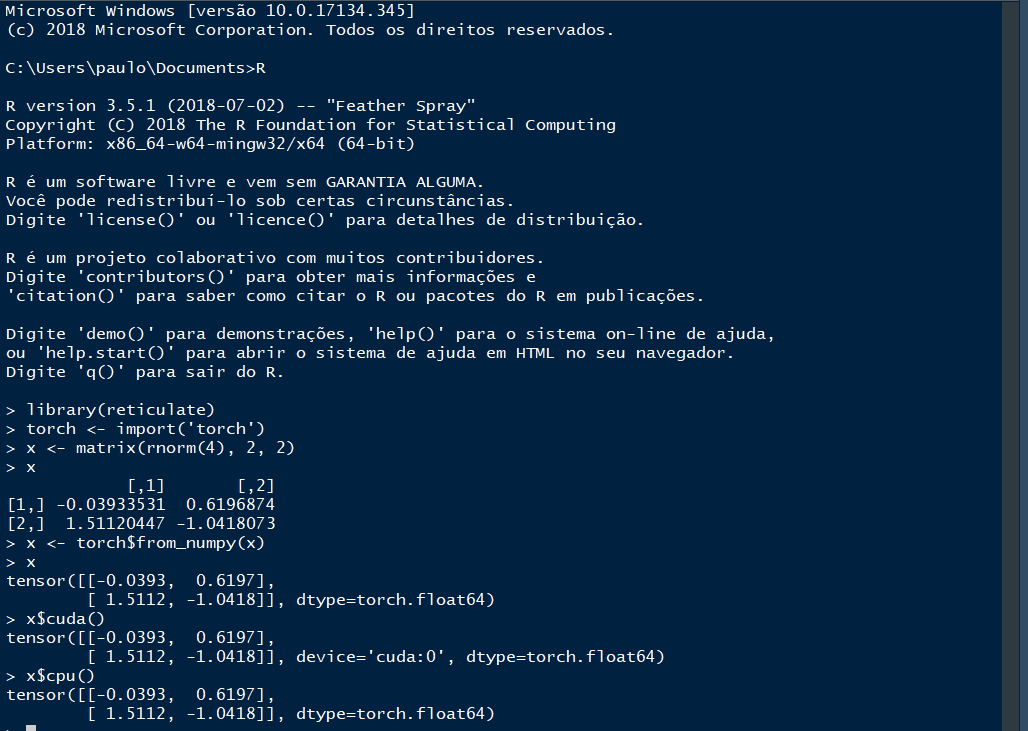

But I found something more interesting. When I made a reprex with this code, it simply worked:

library(reticulate)

torch <- import('torch')

np <- import('numpy')

device = torch$device("cuda:0")

torch$randn(c(4L), c(4L), device = device, dtype = torch$float)

#> tensor([[-0.5082, -0.0566, 0.3794, -0.6489],

#> [ 1.4504, 0.4627, 1.5426, -0.0883],

#> [ 0.4226, -0.7120, -0.7997, 0.1578],

#> [ 0.1594, 1.5402, 0.9680, -1.0612]], device='cuda:0')

torch$randn(c(4L), c(4L), device = device, dtype = torch$float)

#> tensor([[-0.2950, 1.3037, 0.6723, -1.9531],

#> [-0.7189, 0.4939, -0.0259, -0.0818],

#> [ 0.8678, -0.3601, -0.3294, -1.7991],

#> [ 0.8432, 0.1208, 0.7282, 1.3152]], device='cuda:0')

Created on 2018-10-20 by the reprex package (v0.2.1)

Any help for this? Thanks!

I'm using RStudio 1.2.97 and:

sessionInfo()

#> R version 3.5.1 (2018-07-02)

#> Platform: x86_64-w64-mingw32/x64 (64-bit)

#> Running under: Windows 10 x64 (build 17134)

#>

#> Matrix products: default

#>

#> locale:

#> [1] LC_COLLATE=Portuguese_Brazil.1252 LC_CTYPE=Portuguese_Brazil.1252

#> [3] LC_MONETARY=Portuguese_Brazil.1252 LC_NUMERIC=C

#> [5] LC_TIME=Portuguese_Brazil.1252

#>

#> attached base packages:

#> [1] stats graphics grDevices utils datasets methods base

#>

#> loaded via a namespace (and not attached):

#> [1] compiler_3.5.1 backports_1.1.2 magrittr_1.5 rprojroot_1.3-2

#> [5] tools_3.5.1 htmltools_0.3.6 yaml_2.2.0 Rcpp_0.12.18

#> [9] stringi_1.2.4 rmarkdown_1.10 knitr_1.20 stringr_1.3.1

#> [13] digest_0.6.16 evaluate_0.11

Created on 2018-10-20 by the reprex package (v0.2.1)

). It is less than 2 years old. But I completely agree with you that it is probably an environment issue.
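One way to test the environment theory is to print the same facts from each session and compare them. The snippet below is only a minimal sketch: `describe_environment` is a hypothetical helper name (not part of torch or reticulate), and it assumes that the interpreter path, the torch install location, and `CUDA_VISIBLE_DEVICES` are the things most likely to differ between sessions.

```python
import importlib.util
import os
import sys


def describe_environment():
    """Collect facts that may differ between two Python sessions.

    Hypothetical helper for illustration; not part of torch or reticulate.
    """
    info = {
        "python_executable": sys.executable,
        "python_version": sys.version.split()[0],
        # When set, this variable can hide GPUs from the process.
        "CUDA_VISIBLE_DEVICES": os.environ.get("CUDA_VISIBLE_DEVICES"),
        "torch_found": importlib.util.find_spec("torch") is not None,
    }
    if info["torch_found"]:
        try:
            import torch

            info["torch_version"] = torch.__version__
            info["torch_path"] = torch.__file__
            info["cuda_available"] = torch.cuda.is_available()
        except Exception as exc:  # e.g. a DLL that fails to load
            info["torch_import_error"] = repr(exc)
    return info


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key}: {value}")
```

Running this once in plain Python and once from the RStudio session (for example through reticulate, next to the output of `reticulate::py_config()`) should show whether both sessions use the same interpreter and the same torch installation.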