Thanks for making the issue more concrete for me, @andrewH.

I'll begin with the Gospel of @hadley in #RForDataScientists, using the via negativa in R for Data Science

Before we continue on to other topics, it’s worth talking briefly about non-tidy data. Earlier in the chapter, I used the pejorative term “messy” to refer to non-tidy data. That’s an oversimplification: there are lots of useful and well-founded data structures that are not tidy data. There are two main reasons to use other data structures:

Alternative representations may have substantial performance or space advantages.

Specialised fields have evolved their own conventions for storing data that may be quite different to the conventions of tidy data.

Either of these reasons means you’ll need something other than a tibble (or data frame). If your data does fit naturally into a rectangular structure composed of observations and variables, I think tidy data should be your default choice. But there are good reasons to use other structures; tidy data is not the only way.

If you’d like to learn more about non-tidy data, I’d highly recommend this thoughtful blog post by Jeff Leek

Taking the Nicosian Creed approach of what does constitute tidy, from the same Gospel

There are three interrelated rules which make a dataset tidy:

Each variable must have its own column.

Each observation must have its own row.

Each value must have its own cell.

The key definition (forgive me my sins, for I came here originally from the law) is observation, which I can't find in the online version

An observation, or a case, is a set [emphasis added] of measurements under similar conditions (you usually make all of the measures in an observation at the same time [emphasis added] and on the same object [emphasis added]. An observation will contain several values, each associated with a different variable ... [or] a data point.

Therefore, I think, there is no inconsistency between "tidy" and `a rectangular array, with the first value probably a date, an observation number, or some other unique identifier, and the other variables in columns'.

As to whether this raises an obstacle to ggplot, in particular, it seems a non-issue.

Taking your example.

library(tibble)

set.seed(137)

yr. <- 1990:1995

nm. <- runif(6)

tx. <- letters[1:6]

fc. <- paste0(tx., round(nm. * 100, 0))

tb_wide <- tibble(year. = yr., num. = nm., txt. = tx., fact. = fc.) # , key = year., index = year.)

tb_wide

#> # A tibble: 6 x 4

#> year. num. txt. fact.

#> <int> <dbl> <chr> <chr>

#> 1 1990 0.649 a a65

#> 2 1991 0.413 b b41

#> 3 1992 0.914 c c91

#> 4 1993 0.764 d d76

#> 5 1994 0.365 e e36

#> 6 1995 0.958 f f96

Created on 2019-11-14 by the reprex package (v0.3.0)

qualifies as tidy.

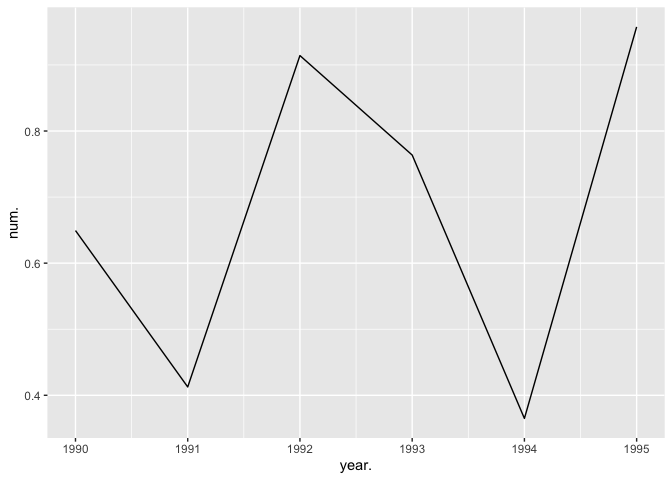

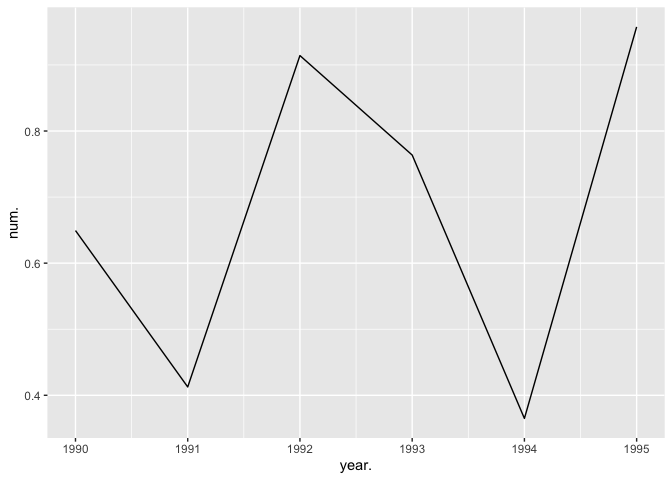

To apply the ggplot grammar of graphics to tb_wide is not problematic due to layers., appropriate care being taken not to mix continuous and discrete variables without further intervention.

library(ggplot2)

library(tibble)

set.seed(137)

yr. <- 1990:1995

nm. <- runif(6)

tx. <- letters[1:6]

fc. <- paste0(tx., round(nm. * 100, 0))

tb_wide <- tibble(year. = yr., num. = nm., txt. = tx., fact. = fc.) # , key = year., index = year.)

tb_wide

#> # A tibble: 6 x 4

#> year. num. txt. fact.

#> <int> <dbl> <chr> <chr>

#> 1 1990 0.649 a a65

#> 2 1991 0.413 b b41

#> 3 1992 0.914 c c91

#> 4 1993 0.764 d d76

#> 5 1994 0.365 e e36

#> 6 1995 0.958 f f96

p <- ggplot(data = tb_wide, aes(x = year.)) + geom_line(aes(y = num.))

p

Created on 2019-11-14 by the reprex package (v0.3.0)

As for tstibble, I don't see why the class changes anything. The underlying problem is the mix of num, txt and fact objects, of which the second and third cannot be property coerced to num.

The approach, when coercion is unavailable would seem to be facet or glob.

As always, I may be wrongheaded, but does this advance the question?