I'd love some. It's not a very important project; I'm just doing it out of personal interest, but I'd love to see whether I am going wrong, whether the doc is that messed up, or both. From my experience yesterday, it looks like a lot of my current trouble is just inconsistent data input, but I'm not seeing the problems. It is also obvious that the staff at the Office of the Conflict of Interest and Ethics Commissioner don't know anything about data analysis.

Also, would you have any idea why a straight read_html() is giving me an error while @dromano reports no problem?

Some of the rest of the data is so badly arranged (see the Nature of Benefits and Amount columns) that it's probably not worth trying to do anything with it. If it were a serious project, I'd re-key everything into a decent, tidy data set.

Anyway, if you run my earlier code you should end up with the initial data set.

I am primarily interested in which MPs went where and what organizations were funding the trips.

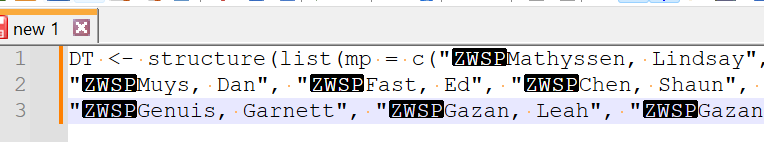

I pulled out these columns and my tables were not making sense. What looked like the same MP's name or Sponsor name was appearing repeatedly.

I finally opened the file in a spreadsheet and started checking spelling and spacing in the Sponsor column. I managed to reduce but not totally eliminate the duplication. So far I am not having any luck with MPs' names.
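For reference, here is a minimal sketch of the same spacing check done in R rather than in a spreadsheet, assuming the duplicates come from leading, trailing or repeated spaces. The toy vector and the `clean_names()` helper are made up for illustration; only base R functions (`gsub()`, `trimws()`, `unique()`) are used.

```r
# Toy vector (hypothetical): entries 1-2 and 3-4 differ only by whitespace
mp <- c("Genuis, Garnett", " Genuis, Garnett", "Sgro, Judy", "Sgro,  Judy")

# Hypothetical helper: collapse runs of whitespace to one space, then trim the ends
clean_names <- function(x) trimws(gsub("\\s+", " ", x))

mp_clean <- clean_names(mp)

# Compare the number of distinct values before and after cleaning
n_before <- length(unique(mp))
n_after  <- length(unique(mp_clean))

# Show which raw values changed, wrapped in brackets so the spaces are visible
changed <- mp[mp != mp_clean]
print(sprintf("[%s]", changed))
```

This would only catch whitespace differences. It would not merge entries that differ in wording, such as "Canadians for Affordable Energy" versus "Canadians for Affordable Energy (Dan McTeague)", and it may miss non-breaking spaces copied from a web page.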

In any case, my somewhat cleaned-up data set is below. The code below shows the duplications I am getting in my tables.

Thanks

suppressMessages(library(data.table))

suppressMessages(library(flextable))

# DT (see Data below) is a plain data.frame; convert it so the data.table syntax works

setDT(DT)

DT1 <- DT[, .N, by = sponsor]

TB1 <- flextable(DT1)

TB1 <- set_header_labels(

x = TB1 ,

values = c(

sponsor = "Sponsor",

N = "Count")

)

set_table_properties(TB1, layout = "autofit")

DT2 <- DT[, .N, by = mp]

TB2 <- flextable(DT2)

TB2 <- set_header_labels(

x = TB2,

values = c(

mp = "MP",

N = "Count")

)

set_table_properties(TB2, layout = "autofit")

Data

DT <- structure(list(mp = c("Mathyssen, Lindsay", "Lewis, Leslyn",

"Muys, Dan", "Fast, Ed", "Chen, Shaun", "Falk, Rosemarie",

"Genuis, Garnett", "Gazan, Leah", "Gazan, Leah", "Falk, Rosemarie",

"Ellis, Stephen", "Lawrence, Philip", "Stubbs, Shannon",

"Aboultaif, Ziad", "Patzer, Jeremy", "Williamson, John",

"Lake, Mike", "Bergeron, Stéphane", "McLeod, Michael",

"Gallant, Cheryl", "Coteau, Michael", " Genuis, Garnett",

"Genuis, Garnett", "Sgro, Judy", "Boulerice, Alexandre",

"McPherson, Heather", "Cooper, Michael", "Lattanzio, Patricia",

"Sgro, Judy", "Sgro, Judy", "Rota, Anthony", "Arya, Chandra",

"Bergeron, Stéphane", "Kmiec, Tom", "Sinclair-Desgagné, Nathalie",

"Lewis, Chris", "Kayabaga, Arielle", "Kayabaga, Arielle",

"Aitchison, Scott", "Bradford, Valerie", "Gaheer, Iqwinder",

"Melillo, Eric", "Gallant, Cheryl", "Lake, Mike", "Arya, Chandra",

"Arya, Chandra", "Barrett, Michael", "Bergeron, Stéphane",

"Bezan, James", "Chong, Michael", "Cooper, Michael",

"Dancho, Raquel", "Gaudreau, Marie-Hélène", "Genuis, Garnett",

"Gill, Marilène", "Hardie, Ken", "Lantsman, Melissa",

"Mathyssen, Lindsay", "McKay, John", "McPherson, Heather",

"Sarai, Randeep", "Seeback, Kyle", "Martel, Richard",

"Schiefke, Peter", "Aitchison, Scott", "Berthold, Luc",

"Blanchette-Joncas, Maxime", "Bradford, Valerie", "Chambers, Adam",

"Champoux, Martin", "Chahal, Harnirjodh (George)", "Chen, Shaun",

"Findlay, Kerry-Lynne", "Fortin, Rhéal", "Goodridge, Laila",

"Hallan, Jasraj Singh", "Hepfner, Lisa", "Kramp-Neuman, Shelby",

"Lapointe, Viviane", "Paul-Hus, Pierre", "Scheer, Andrew",

"Shanahan, Brenda", "Blois, Kody", "Blanchet, Yves-François",

"Housefather, Anthony", "Rempel Garner, Michelle", "Arya, Chandra",

"Ehsassi, Ali", "Hoback, Randy", "Ashton, Niki", "Arya, Chandra",

"Brunelle-Duceppe, Alexis"), sponsor = c("Ahmadiyya Muslim Jama'at",

"Alliance for Responsible Citizenship (ARC)", "Belent Mathew",

"Canada-DPRK Knowledge Partnership Program", "Canadian Foodgrains Bank",

"Canadian Foodgrains Bank", "Canadian Foodgrains Bank",

"Canadian Union of Postal Workers", "Canadian Union of Public Employees",

"Canadians for Affordable Energy", "Canadians for Affordable Energy (Dan McTeague)",

"Canadians for Affordable Energy (Dan McTeague)", "Canadians for Affordable Energy (Dan McTeague)",

"Central Election Commission of the Republic of Uzbekistan",

"Church of God Ministries", "Danube Institute", "Education Cannot Wait",

"Federal Government of Germany", "Government of Northwest Territories",

"Government of Taiwan", "Indigenous Sport and Wellness Ontario",

"Inter-Parliamentary Alliance on China (IPAC)", "Inter-Parliamentary Alliance on China (IPAC)",

"Inter-Parliamentary Alliance on China (IPAC)", "International Association of Machinists & Aerospace Workers",

"International Campaign to Abolish Nuclear Weapons", "Iran Democratic Association",

"Iran Democratic Association", "Iran Democratic Association",

"Iran Democratic Association", "Italian Ministry of Foreign Affairs",

"Kurdistan Regional Government", "Kurdistan Regional Government",

"Kurdistan Regional Government", "Kurdistan Regional Government",

"One Free World International", "One Young World", "Open Society Foundations, Unitas Communications",

"Results Canada", "Results Canada", "Results Canada",

"Results Canada", "Saab Canada Inc.", "Special Olympics International",

"State Committee on work with Diaspora of The Republic of Azerbaijan",

"Taipei Economic and Cultural Office in Canada", "Taipei Economic and Cultural Office in Canada",

"Taipei Economic and Cultural Office in Canada", "Taipei Economic and Cultural Office in Canada",

"Taipei Economic and Cultural Office in Canada", "Taipei Economic and Cultural Office in Canada",

"Taipei Economic and Cultural Office in Canada", "Taipei Economic and Cultural Office in Canada",

"Taipei Economic and Cultural Office in Canada", "Taipei Economic and Cultural Office in Canada",

"Taipei Economic and Cultural Office in Canada", "Taipei Economic and Cultural Office in Canada",

"Taipei Economic and Cultural Office in Canada", "Taipei Economic and Cultural Office in Canada",

"Taipei Economic and Cultural Office in Canada", "Taipei Economic and Cultural Office in Canada",

"Taipei Economic and Cultural Office in Canada", "The Centre for Israel and Jewish Affairs (CIJA)",

"The Centre for Israel and Jewish Affairs (CIJA)", "The Centre for Israel and Jewish Affairs (CIJA)",

"The Centre for Israel and Jewish Affairs (CIJA)", "The Centre for Israel and Jewish Affairs (CIJA)",

"The Centre for Israel and Jewish Affairs (CIJA)", "The Centre for Israel and Jewish Affairs (CIJA)",

"The Centre for Israel and Jewish Affairs (CIJA)", "The Centre for Israel and Jewish Affairs (CIJA)",

"The Centre for Israel and Jewish Affairs (CIJA)", "The Centre for Israel and Jewish Affairs (CIJA)",

"The Centre for Israel and Jewish Affairs (CIJA)", "The Centre for Israel and Jewish Affairs (CIJA)",

"The Centre for Israel and Jewish Affairs (CIJA)", "The Centre for Israel and Jewish Affairs (CIJA)",

"The Centre for Israel and Jewish Affairs (CIJA)", "The Centre for Israel and Jewish Affairs (CIJA)",

"The Centre for Israel and Jewish Affairs (CIJA)", "The Centre for Israel and Jewish Affairs (CIJA)",

"The Centre for Israel and Jewish Affairs (CIJA)", "The Centre for Israel and Jewish Affairs (CIJA)",

"The Greens/EFA in the European Parliament", "UJA Federation of Greater Toronto",

"UJA Federation of Greater Toronto", "University of British Columbia Knowledge Partnership Program",

"University of British Columbia Knowledge Partnership Program",

"University of British Columbia Knowledge Partnership Program",

"World Hellenic Inter-Parliamentary Association", "World Hindu Foundation",

"World Uyghur Congress"), counts = c(1L, 1L, NA, NA, NA, NA,

NA, NA, NA, 1L, 2L, 3L, 4L, 1L, NA, NA, NA, NA, NA, NA, NA, 1L,

2L, 3L, NA, NA, 1L, 2L, 3L, 4L, NA, 1L, 2L, 3L, 4L, NA, NA, NA,

NA, NA, NA, NA, NA, NA, NA, 1L, 2L, 3L, 4L, 5L, 6L, 7L, 8L, 9L,

10L, 11L, 12L, 13L, 14L, 15L, 16L, 17L, 1L, 2L, 3L, 4L, 5L, 6L,

7L, 8L, 9L, 10L, 11L, 12L, 13L, 14L, 15L, 16L, 17L, 18L, 19L,

20L, 21L, 1L, NA, NA, NA, NA, NA, NA, NA, NA), dest = c("United Kingdom",

"London, England", "Kerala, India", "Seoul, South Korea",

"Kenya", "Kenya", "Kenya", "Toronto, Ontario, Canada",

"Vancouver, British Columbia, Canada", "London, England",

"London, England", "London, England", "London, England",

"Tashkent,Uzbekistan", "Tampa, Florida", "London, England",

"Geneva, Switzerland", "Munich, Germany", "Yellowknife, Fort Smith and Hay River, Northwest Territories, Canada",

"Taipei, Taiwan", "Halifax, Nova Scotia, Canada", "Tokyo, Japan",

"Prague, Czech Republic", "Prague, Czech Republic", "Hollywood, Maryland",

"Tokyo and Hiroshima, Japan", "Paris, France", "Paris, France",

"Brussels, Belguim", "Paris, France", "Pizzo Calabro, Tropea, Catanzaro, Cosenza, Sila, Morano Calabro, Pedace and Pietrafitta, Italy",

"Kurdistan Region, Iraq", "Erbil, Kurdistan Region of Iraq",

"Region of Kurdistan, Iraq (Erbil, Slemani, Duhok)", "Erbil, Kurdistan Region of Iraq",

"Iraq", "Belfast, Ireland", "San Francisco, California",

"Kenya", "Kenya", "Kenya", "Kenya", "Karlskrona, Sweden",

"Berlin, Germany", "Baku, Azerbaijan", "Taiwan", "Taiwan",

"Taiwan", "Taipei, Taiwan", "Taipei, Taiwan", "Taipei, Taichung and Nantou, Taiwan",

"Taiwan", "Taiwan", "Taiwan", "Taipei, Taiwan", "Taiwan",

"Taipei, Taiwan", "Taiwan", "Taipei, Taiwan", "Taipei, Taiwan",

"Taipei, Taiwan", "Taipei, Taiwan", "Israel", "Israel",

"Israel", "Tel Aviv, Israel", "Israel", "Israel",

"Jerusalem, Tel Aviv and Golan Heights, Israel; Ramallah, Palestine",

"Israel", "Israel", "Israel", "Israel", "Israel",

"Jerusalem, Tel Aviv and Golan Heights, Israel; Ramallah, Palestine",

"Israel", "Israel", "Israel", "Tel Aviv, Israel",

"Israel", "Jerusalem, Tel Aviv, Golan Heights, Israel; Ramallah, Palestine",

"Israel", "Israel and Palestinian territories", "Barcelona, Spain",

"Israel", "Israel", "Seoul, South Korea", "Republic of Korea",

"South Korea", "Kalamata and Athens, Greece", "Bangkok, Thailand",

"Tokyo, Japan")), class = "data.frame", row.names = c(NA,

-92L))