First, load the page and take a look at what you've got:

library(rvest)

url <- "http://saih.chminosil.es/index.php?url=/datos/resumenPluviometria"

html <- read_html(url)

html %>% html_text()  # all text content of the page

html %>% html_elements("*") %>% html_attr("class") %>% unique()  # every distinct class attribute used on the page

If you run this, you'll see that the data is not returned correctly. That can be a symptom of bot protection, but in this case the cause is different: the request never reaches the page you asked for.

Starting a web session with `session()` shows where the request actually ends up:

session("http://saih.chminosil.es/index.php?url=/datos/resumenPluviometria")

<session> http://saih.chminosil.es/index.php?url=/datos/mapas/mapa:H1/area:HID/acc:

Status: 200

Type: text/html

Size: 45929

The URL you request, "http://saih.chminosil.es/index.php?url=/datos/resumenPluviometria", actually redirects the session to "http://saih.chminosil.es/index.php?url=/datos/mapas/mapa:H1/area:HID/acc:". So you first need to start from that page, follow the link to the resumenPluviometria page, and then scrape it.

homepage <- session("http://saih.chminosil.es/index.php?url=/datos/mapas/mapa:H1/area:HID/acc:")  # start from the page the redirect lands on

resumenPluviometria <- homepage %>% session_follow_link(xpath = "/html/body/div/div[2]/div[1]/div/div[2]/ul/li[1]/div/div[2]/div/div[1]/ul/li[3]/a")  # follow the menu link to the rainfall summary
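The absolute XPath above depends on the exact nesting of the menu and will break if the layout changes. A sketch of a sturdier alternative is to select the link with a CSS attribute selector, assuming the menu link's href contains `resumenPluviometria` (the "Navigating to" message below shows the href is `index.php?url=/datos/resumenPluviometria`). The snippet runs offline against a hypothetical stand-in for the menu built with `minimal_html()`, so the markup is an assumption, not the site's real HTML.

```r
library(rvest)

# Hypothetical stand-in for the menu on the map page; the real markup is not reproduced here.
menu <- minimal_html('
  <ul>
    <li><a href="index.php?url=/datos/mapas/mapa:H1/area:HID/acc:">Mapa</a></li>
    <li><a href="index.php?url=/datos/resumenPluviometria">Pluviometria</a></li>
  </ul>
')

# Match the link by a substring of its href rather than by its position in the DOM.
href <- menu %>%
  html_element("a[href*='resumenPluviometria']") %>%
  html_attr("href")

# Resolve the relative href against the site root to get a full URL.
target <- xml2::url_absolute(href, "http://saih.chminosil.es/")
print(target)
```

On the live site, the same selector should work directly with the session: `homepage %>% session_follow_link(css = "a[href*='resumenPluviometria']")`. I haven't checked whether other links on the real page also match that selector.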

# Navigating to index.php?url=/datos/resumenPluviometria

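html <- resumenPluviometria %>% read_html()

table <- html %>% html_element("table") %>% html_table()  # html_table() on a whole document returns a list of tibbles; html_element() picks the first <table>

print(table)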

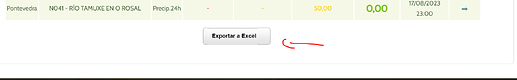

The result is a tibble with 279 rows and 9 columns. Every column is read as character (`<chr>`) except `Tendencia` (`<lgl>`). The first 10 rows of the first five columns (the console truncates `Señal` as `Prec~`):

| # | Provincia | Estación | Señal | Umbral Alerta (mm) | Umbral Prealerta (mm) |
|---|---|---|---|---|---|
| 1 | León | E003 - LAS ROZAS | Prec~ | 60,00 | 30,00 |
| 2 | León | E003 - LAS ROZAS | Prec~ | 120,00 | 80,00 |
| 3 | León | E003 - LAS ROZAS | Prec~ | - | - |
| 4 | León | E005 - MATALAVILLA | Prec~ | 60,00 | 30,00 |
| 5 | León | E005 - MATALAVILLA | Prec~ | 120,00 | 80,00 |
| 6 | León | E005 - MATALAVILLA | Prec~ | - | - |
| 7 | León | P008 - COLINAS DEL CAMPO | Prec~ | 60,00 | 30,00 |
| 8 | León | P008 - COLINAS DEL CAMPO | Prec~ | 120,00 | 80,00 |
| 9 | León | P008 - COLINAS DEL CAMPO | Prec~ | - | - |
| 10 | León | N036 - RIO TREMOR EN ALMAG~ | Prec~ | 60,00 | 30,00 |

The remaining 269 rows are not shown, nor are the other four columns: `Umbral Activación (mm)`, `Valor actual (mm)`, `Fecha` and `Tendencia`. Use `print(n = ...)` to see more rows.
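The threshold columns come back as `<chr>` because the page uses a decimal comma (`60,00`) and a dash (`-`) for missing values. `html_table()` can handle both while parsing through its `dec` and `na.strings` arguments. A minimal sketch on a hypothetical three-row excerpt built from the first rows of the output above (only the two threshold columns, to keep it short):

```r
library(rvest)

# Hypothetical excerpt: the first three rows of the two threshold columns shown above.
page <- minimal_html('
  <table>
    <tr><th>Umbral Alerta (mm)</th><th>Umbral Prealerta (mm)</th></tr>
    <tr><td>60,00</td><td>30,00</td></tr>
    <tr><td>120,00</td><td>80,00</td></tr>
    <tr><td>-</td><td>-</td></tr>
  </table>
')

# dec = "," reads "60,00" as 60; na.strings = "-" turns the dash placeholder into NA.
thresholds <- page %>%
  html_element("table") %>%
  html_table(dec = ",", na.strings = "-")

print(thresholds)
```

Against the live page this would be `html %>% html_element("table") %>% html_table(dec = ",", na.strings = "-")`, assuming the data is in the first table. Note that `na.strings = "-"` applies to every column, so a text cell containing only `-` would also become `NA`.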