My model was tuned and seems to work well, but now I'm not sure how to save it and re-apply it.

Minimal reprex (built by following this tutorial to generate and tune the model, and this article on saving the model with {yaml} and {tidypredict}, using the iris data):

library(tidymodels)
library(tidypredict)
library(yaml)
tidymodels_prefer()

#Initial split, generate training and testing
set.seed(123) #makes the split and the resamples reproducible (seed value is arbitrary)
mysplit <- initial_split(iris %>% select(-Species), strata = Petal.Width)
training_set <- training(mysplit)
test_set <- testing(mysplit)

#Set up the model specification
#The hyperparameters will be tuned
xgb_spec <- boost_tree(
  trees = 1000,
  tree_depth = tune(),
  min_n = tune(),
  loss_reduction = tune(),
  sample_size = tune(),
  mtry = tune(),
  learn_rate = tune()
) %>%
  set_engine("xgboost") %>%
  set_mode("regression")

#Set up a space-filling grid design to cover the hyperparameter space as well as possible
xgb_grid <- grid_latin_hypercube(
  tree_depth(),
  min_n(),
  loss_reduction(),
  sample_size = sample_prop(),
  finalize(mtry(), training_set %>% select(-Petal.Width)), #depends on the actual # of predictors, so the outcome column is dropped
  learn_rate(),
  size = 30
)
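The `finalize(mtry(), ...)` call takes the upper bound of `mtry` from the number of columns in whatever data it is given, so passing a data frame that still contains the outcome counts `Petal.Width` as a candidate predictor. A minimal sketch on the same numeric iris columns (the object names here are illustrative):

```r
library(tidymodels)

# Numeric iris columns, as in the question: 3 predictors + the outcome
training_num <- iris %>% select(-Species)

# finalize() fills in the unknown upper bound of mtry() with ncol(data)
mtry_all_cols   <- finalize(mtry(), training_num)
mtry_predictors <- finalize(mtry(), training_num %>% select(-Petal.Width))

range_get(mtry_all_cols)    # upper bound 4: the outcome column is counted
range_get(mtry_predictors)  # upper bound 3: predictors only
```

With three predictors, only the second range matches the number of columns the model can actually sample.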

#Put the model specification into a workflow
xgb_wf <- workflow() %>%
  add_formula(Petal.Width ~ .) %>%
  add_model(xgb_spec)

#Create cross-validation resamples for tuning the model
input_folds <- vfold_cv(training_set, strata = Petal.Width)

#Use the tunable workflow to tune
doParallel::registerDoParallel()
xgb_res <- tune_grid(
  xgb_wf,
  resamples = input_folds,
  grid = xgb_grid,
  control = control_grid(save_pred = TRUE)
)

#Select the best parameters based on RMSE
best_rmse <- select_best(xgb_res, metric = "rmse")
#Finalize the tunable workflow using the best parameters
final_xgb <- finalize_workflow(
  xgb_wf,
  best_rmse
)

#############
#Fit the final best model to the training set and evaluate it on the test set
final_res <- last_fit(final_xgb, mysplit)
#############

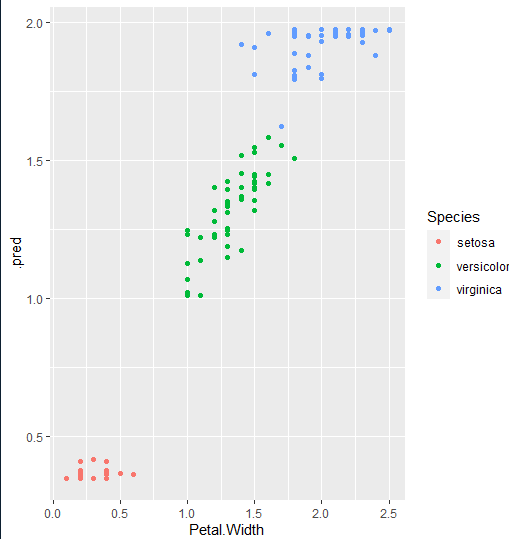

#Get the model-predicted values of the test set
pred_df <-
  final_res %>%
  collect_predictions() %>%
  as.data.frame()
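Since `collect_predictions()` returns the observed `Petal.Width` next to the `.pred` column, the test-set error can be recomputed from `pred_df` with yardstick (`collect_metrics(final_res)` reports the same metrics directly). A sketch using a tiny hypothetical stand-in for `pred_df`, because the real values depend on the tuning run:

```r
library(yardstick)

# Hypothetical stand-in for pred_df: collect_predictions() returns the
# observed outcome (Petal.Width) alongside the prediction column (.pred)
pred_df <- data.frame(
  Petal.Width = c(1.0, 2.0, 3.0),
  .pred       = c(1.0, 2.0, 4.0)
)

# Test-set RMSE recomputed from the collected predictions
test_rmse <- rmse(pred_df, truth = Petal.Width, estimate = .pred)
test_rmse$.estimate  # sqrt(1/3), about 0.577
```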

But now I'm a little confused about how to save this model so that it can be re-run later. (I know that normally there would be an extra step of re-training on the entire dataset, which I'm skipping here.)
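A commonly used alternative to the tidypredict/YAML route is to save the fitted workflow itself as an R object; for a `last_fit()` result it can be pulled out with `extract_workflow(final_res)`, and the reloaded workflow is applied with `predict()`, not `fit()`. The sketch below is self-contained, so it fits a stand-in workflow with fixed, arbitrary hyperparameters instead of the tuned ones; `final_fit`, `iris_split` and the temp-file path are hypothetical names. For engines that hold native pointers, the {bundle} package (`bundle()`/`unbundle()`) may be a safer wrapper around `saveRDS()`; whether it is needed likely depends on the installed xgboost version.

```r
library(tidymodels)

# Stand-in for the finalized workflow: fixed, illustrative hyperparameters
# instead of the tuned ones (the values here are arbitrary)
iris_num <- iris %>% select(-Species)
set.seed(123)
iris_split <- initial_split(iris_num, strata = Petal.Width)

final_fit <- workflow() %>%
  add_formula(Petal.Width ~ .) %>%
  add_model(
    boost_tree(trees = 50, tree_depth = 3, learn_rate = 0.1) %>%
      set_engine("xgboost") %>%
      set_mode("regression")
  ) %>%
  fit(training(iris_split))

# With a last_fit() result, the fitted workflow would instead come from
# extract_workflow(final_res)

# Save the whole fitted workflow (formula preprocessing + model) and read it back
model_path <- tempfile(fileext = ".rds")
saveRDS(final_fit, model_path)
reloaded_fit <- readRDS(model_path)

# No refitting: predict() applies the saved model to new data
new_preds  <- predict(reloaded_fit, new_data = testing(iris_split))
orig_preds <- predict(final_fit, new_data = testing(iris_split))
```

Saving the workflow keeps the preprocessing and the model together, so the reloaded object accepts the same raw columns as the original data.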

parsed <- parse_model(extract_fit_engine(final_res)) #Is this right?
write_yaml(parsed, "my_model.yml")
loaded_model <- read_yaml("my_model.yml")
loaded_model <- as_parsed_model(loaded_model)

If this is correct, how would I apply it to the test set again? The same test set is fine as a toy example. I thought it would work like this, but no luck:

loaded_model %>% fit(Petal.Width ~., data = iris)

Error in UseMethod("fit") :
  no applicable method for 'fit' applied to an object of class "list"
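The error arises because `fit()` trains a model from a specification or workflow, while the object read back from YAML holds the already-fitted trees as plain R data, so there is no `fit()` method for it. With tidypredict, a parsed model is applied by turning it into an R expression with `tidypredict_fit()` and evaluating that expression against new data, for example inside `mutate()`. A minimal sketch, assuming tidypredict supports the installed xgboost version and the default regression objective; the fixed hyperparameters and the names `wf_fit`, `iris_split` and `.pred_yaml` are illustrative, not taken from the tuned model above:

```r
library(tidymodels)
library(tidypredict)
library(yaml)

# Stand-in model: fixed, arbitrary hyperparameters (not the tuned ones)
iris_num <- iris %>% select(-Species)
set.seed(123)
iris_split <- initial_split(iris_num, strata = Petal.Width)

wf_fit <- workflow() %>%
  add_formula(Petal.Width ~ .) %>%
  add_model(
    boost_tree(trees = 50, tree_depth = 3, learn_rate = 0.1) %>%
      set_engine("xgboost") %>%
      set_mode("regression")
  ) %>%
  fit(training(iris_split))

# Parse the underlying xgb.Booster and round-trip it through YAML
parsed <- parse_model(extract_fit_engine(wf_fit))
yml_path <- tempfile(fileext = ".yml")
write_yaml(parsed, yml_path)
loaded_model <- as_parsed_model(read_yaml(yml_path))

# No refitting: tidypredict_fit() builds an R expression from the parsed
# trees, and !! splices it into mutate() so it is evaluated row by row
test_preds <- testing(iris_split) %>%
  mutate(.pred_yaml = !!tidypredict_fit(loaded_model))

# The workflow's own predictions, for comparison
test_preds$.pred_wf <- predict(wf_fit, new_data = testing(iris_split))$.pred
```

If the parsing is faithful, `.pred_yaml` should agree with `.pred_wf` up to floating-point rounding, so comparing the two columns is a quick check on the YAML round trip.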