##################

EDIT

##################

I haven't been able to find a solution using arrays with the apply function.

apply(X = array(data = unlist(c(women, women, women, women)), dim = c(2,15,4)), MARGIN = 3, FUN = mean)

I think some kind of transformation to the array may let me use the apply function and then revert it back. I'll update this if I figure it out.

##################

ORIGINAL

##################

Hi Rstudio Community,

I've run across an interesting problem (I'll preface this post with PEMI, please excuse my ignorance, which when I get sufficient privileges is going to be a tag on all my posts ![]() ).

).

I know how to run functions across rows and columns and data frames.

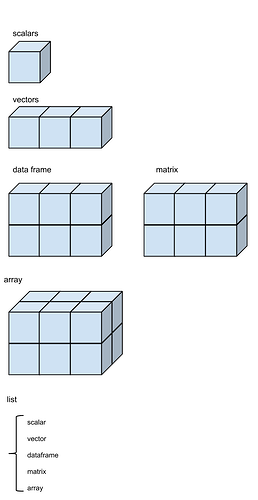

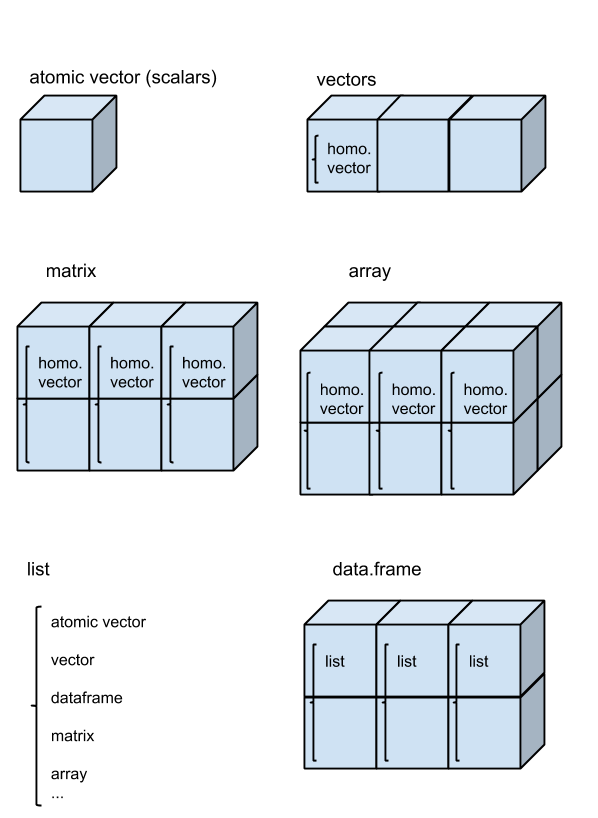

Is there a nice tidy way to run a function through data.frames and across scalars?

Maybe by converting the list of data.frames to an array?

If I've been unclear so far, let me try to demonstrate what I'm talking about. Where:

example:

women2 <- mice::ampute(data = women2, prop = 0.3)$amp

women2$weight

Amelia::amelia(x = women2, m = 100)$imputations

I want to take the mean of the same value across data.frame's? Meaning if:

>df1

a b c

d e f

g h i

>df2

j k l

m n o

p q r

>df3

s t u

v w x

y z aa

then what I want is:

df_1_3_FINAL

[mean(c(a,j,s))] [mean(c(b,k,t))] [mean(c(c,l,u))]

[mean(c(d,m,v))] [mean(c(e,n,w))] [mean(c(f,o,x))]

[mean(c(g,p,y))] [mean(c(h,q,z))] [mean(c(i,r,aa))]

I think this is a problem suited for an array, but I haven't dealt with arrays much, or ever seen some examples of arrays being used with dplyr (IMO, arrays are the forgotten options in R similar to the "Inverse Gaussian" distribution in GLM methods).

Does anyone have some alternative options for this kind of a problem?

PS

On a side note, is there a reason we don't use arrays more often? Aren't they supposed to have a smaller memory footprint? If the reason were for the cost of complexity when trying to manipulate data, I wonder if the tidy verse has some ways of simplifying their usage?