Routemaster

Authors: Noel Dempsey

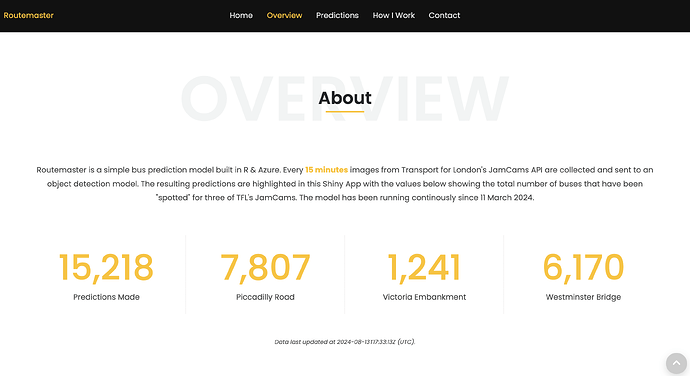

Abstract: Routemaster is a simple bus detection model built in R and Azure, designed to detect London buses in TFL’s JamCams API feed. It uses serverless Azure custom handler functions to run R code on a schedule, an Azure Custom Vision object detection model, and an R Shiny/HTML template for the frontend.

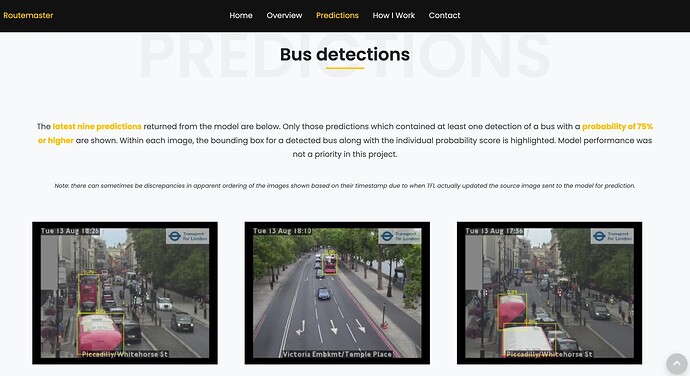

The Shiny frontend shows the number of buses detected by the model, the bounding box of each predicted detection overlaid on a still from the relevant camera, a full walkthrough of why and how I made Routemaster, and final thoughts on issues faced during its development and things I’d like to do differently.

Full Description: Routemaster is a simple bus prediction model built in R and Azure, designed to detect London buses in TFL’s JamCams API feed. It uses serverless Azure custom handler functions to run R code on a schedule, an Azure Custom Vision object detection model, and an R Shiny/HTML template for the frontend.

In short, the creation of Routemaster can be broken down into four core steps: scheduling the capture and storage of images from TFL’s API; training an object detection model; scheduling the submission of images for prediction and storing results; using the prediction results in a frontend application.
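As a rough illustration of the last two steps (storing prediction results and using them in the frontend), the sketch below counts bus detections and converts their bounding boxes to pixel coordinates so they can be drawn over a still. It is written in Python purely for illustration; the project itself uses R, and this is not the author's code. It assumes predictions shaped like Azure Custom Vision object detection output, with a `tagName`, a `probability`, and a `boundingBox` whose values are fractions of the image size. The tag name `"bus"`, the 0.5 threshold, the 400x300 image size, and the names `PixelBox` and `bus_boxes` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PixelBox:
    """A bounding box in pixel coordinates (hypothetical helper type)."""
    left: int
    top: int
    width: int
    height: int


def bus_boxes(predictions, image_width, image_height, threshold=0.5, tag="bus"):
    """Keep detections of `tag` at or above `threshold`, scaled to pixels.

    Assumes each prediction is a dict with "tagName", "probability" and a
    "boundingBox" dict of normalised (0 to 1) left/top/width/height values.
    """
    boxes = []
    for p in predictions:
        if p["tagName"] != tag or p["probability"] < threshold:
            continue  # wrong tag or not confident enough
        b = p["boundingBox"]
        boxes.append(PixelBox(
            left=round(b["left"] * image_width),
            top=round(b["top"] * image_height),
            width=round(b["width"] * image_width),
            height=round(b["height"] * image_height),
        ))
    return boxes


# Made-up sample data: one confident detection and one weak one.
sample = [
    {"tagName": "bus", "probability": 0.91,
     "boundingBox": {"left": 0.25, "top": 0.5, "width": 0.5, "height": 0.25}},
    {"tagName": "bus", "probability": 0.12,
     "boundingBox": {"left": 0.0, "top": 0.0, "width": 0.1, "height": 0.1}},
]

boxes = bus_boxes(sample, image_width=400, image_height=300)
print(len(boxes), "bus(es) detected:", boxes)
```

The length of the returned list is the bus count for that still, and each `PixelBox` gives the rectangle to draw over it. The threshold trades missed buses against false positives and would need tuning against real camera images.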

Earlier in 2024 I started Microsoft’s learning pathway towards the Azure AI Engineer Associate certificate, through which I learnt more about Azure’s computer vision solutions and discovered a demo of its capabilities. Having never used Azure’s custom functions or Azure’s Custom Vision models, I wanted to create a small end-to-end project following a similar approach using my preferred language – R.

My only other self-imposed requirement was that I wanted to use real-world data that was regularly updated or on a feed. Having previously used TFL’s APIs whilst at Parliament, I knew that they had free public endpoints, including their JamCam API. This API exposes 10-second camera feeds and stills from 900+ traffic monitoring cameras all over London, with each camera updating roughly every five minutes.
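To make the shape of that feed a little more concrete, here is a minimal sketch of picking out still-image URLs from a JamCam-style listing. It is in Python for illustration only (the project itself is in R) and makes no network calls. The field names (`id`, `additionalProperties`, `key`, `value`, `imageUrl`, `available`) are assumptions about the response shape rather than details given in this post, and the sample IDs, URLs, and the function name `available_image_urls` are made up.

```python
def available_image_urls(places):
    """Map camera id -> still image URL for cameras flagged as available.

    Assumes each place is a dict with an "id" and a list of
    {"key": ..., "value": ...} entries under "additionalProperties".
    """
    urls = {}
    for place in places:
        # Flatten the key/value list into a plain dict for easy lookup.
        props = {p["key"]: p["value"] for p in place.get("additionalProperties", [])}
        if props.get("available") == "true" and "imageUrl" in props:
            urls[place["id"]] = props["imageUrl"]
    return urls


# Hypothetical payload: one available camera, one unavailable.
sample_places = [
    {"id": "cam-001", "additionalProperties": [
        {"key": "available", "value": "true"},
        {"key": "imageUrl", "value": "https://example.com/cam-001.jpg"},
    ]},
    {"id": "cam-002", "additionalProperties": [
        {"key": "available", "value": "false"},
        {"key": "imageUrl", "value": "https://example.com/cam-002.jpg"},
    ]},
]

image_urls = available_image_urls(sample_places)
print(image_urls)
```

Because each camera refreshes roughly every five minutes, a scheduled capture job gains little from polling more often than that.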

From here I homed in on the idea of detecting buses within the images captured from the API, largely because I thought it would be easier to build the object detection model on a form of traffic that is so identifiable. This is especially important given the small pixel count and low-quality images from the camera feeds.

The result is a project which enabled me to develop some new skills, apply existing knowledge in different ways, and gain additional practical experience in implementing teachings from the Azure AI Engineer pathway, which I passed.

Please see the comment below about the Cyber Security incident at TFL and their current restriction on the use of APIs.

Shiny app: https://routemastershinyapp.azurewebsites.net/

Repo: GitHub - dempseynoel/routemaster: A london bus prediction and detection model
