When you call functions like fit_resamples(), you need to add a pre-processor like a recipe or a formula:

Ksvm_refit <-
  loo_kSVM_test %>%
  fit_resamples(Y ~ X, data_loo)

Unfortunately, we don't support LOOCV; it has fairly bad properties (both statistical and computational) and would require its own infrastructure. If you use it, you get the error:

! Leave-one-out cross-validation is not currently supported with tune.

I would use the bootstrap instead.
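As a minimal sketch of the bootstrap route, the block below passes a model specification, a formula preprocessor, and a set of bootstrap resamples to fit_resamples(). The simulated data, the object names (`cls`, `svm_spec`, `boots`, `boot_res`), and the choice of 25 resamples are illustrative assumptions, not part of the original question.

```r
library(tidymodels)

set.seed(10131)

# Hypothetical two-class data; the class "1" rows are shifted by one unit
cls <- rep(c(-1, 1), each = 30)
dat <- tibble(
  X1 = rnorm(60) + (cls == 1),
  X2 = rnorm(60) + (cls == 1),
  Y  = factor(cls)
)

svm_spec <-
  svm_linear(cost = 1) %>%
  set_mode("classification") %>%
  set_engine("kernlab")

# 25 bootstrap resamples, stratified by the outcome (an arbitrary choice)
boots <- bootstraps(dat, times = 25, strata = Y)

# Model spec first, then the formula preprocessor, then the resamples
boot_res <-
  fit_resamples(
    svm_spec,
    Y ~ .,
    resamples = boots,
    metrics = metric_set(roc_auc)
  )

collect_metrics(boot_res)
```

collect_metrics() averages the metric over the resamples, so the summary comes with a standard error, which the manual LOO approach does not give you directly.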

If you really need LOO, you could do it yourself though:

library(tidyverse)

library(tidymodels)

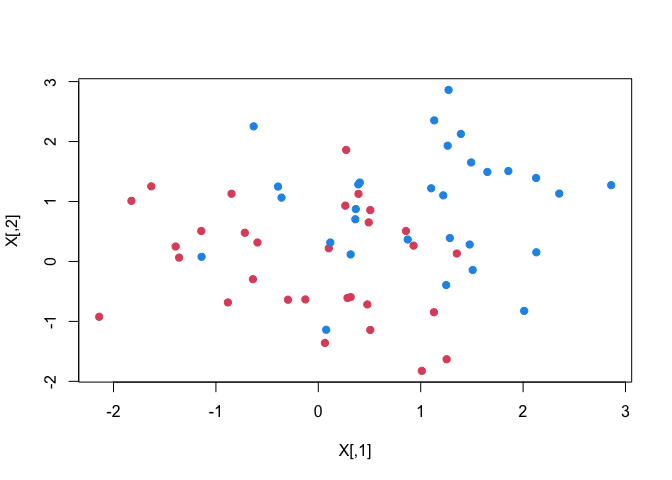

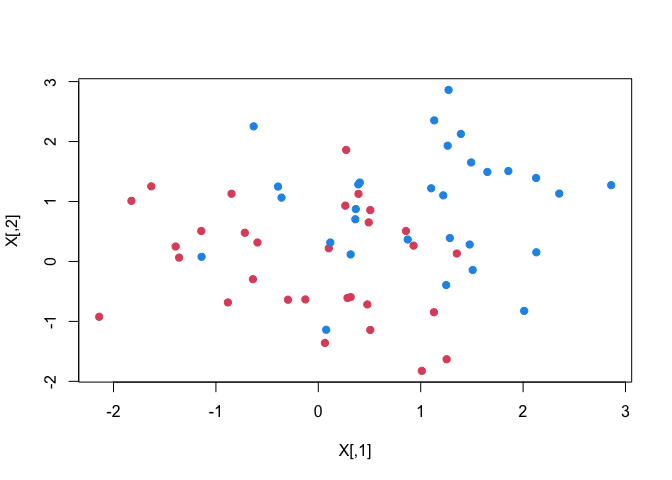

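set.seed(10131)
# Note: rnorm(40) supplies 40 values, which matrix() recycles to fill 60 x 2
X = matrix(rnorm(40), 60, 2)
Y = rep(c(-1, 1), c(30, 30))
# Shift the class "1" rows by one unit in both columns
X[Y == 1, ] = X[Y == 1, ] + 1
plot(X, col = Y + 3, pch = 19)
dat = data.frame(X, Y = as.factor(Y))

dat_split = initial_split(dat, strata = Y)
trainD = training(dat_split)
testD = testing(dat_split)

# One resample per row: 60 leave-one-out splits
data_loo = loo_cv(dat)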

loo_kSVM_test <-
  svm_linear(cost = 1) %>%
  # This model can be used for classification or regression, so set mode
  set_mode("classification") %>%
  set_engine("kernlab")

data_loo_res <-
  data_loo %>%
  mutate(
    fits = map(splits, ~ fit(loo_kSVM_test, Y ~ ., data = analysis(.x))),
    predicted = map2(splits, fits, ~ predict(.y, assessment(.x), type = "prob"))
  )

#> Setting default kernel parameters

all_pred <-
  bind_rows(!!!data_loo_res$predicted) %>%
  # Attach the true classes by position; this assumes the predictions are in
  # the same row order as `dat`
  bind_cols(dat %>% select(Y))

roc_auc(all_pred, Y, `.pred_-1`)
#> # A tibble: 1 × 3
#>   .metric .estimator .estimate
#>   <chr>   <chr>          <dbl>
#> 1 roc_auc binary         0.512

Created on 2023-02-24 by the reprex package (v2.0.1)
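The reprex attaches `dat$Y` to the predictions by position, which is only correct if the leave-one-out splits come back in the same row order as `dat`. That ordering is an assumption worth checking. A variant that does not rely on it is sketched below: it takes the true class from each split's held-out row. The names `svm_spec`, `loo_res`, and `all_pred_aligned` are illustrative, and the resulting estimate may differ from the one printed above.

```r
library(tidymodels)

set.seed(10131)

# Same simulated data as in the reprex above
X <- matrix(rnorm(40), 60, 2)
Y <- rep(c(-1, 1), c(30, 30))
X[Y == 1, ] <- X[Y == 1, ] + 1
dat <- data.frame(X, Y = as.factor(Y))

data_loo <- loo_cv(dat)

svm_spec <-
  svm_linear(cost = 1) %>%
  set_mode("classification") %>%
  set_engine("kernlab")

loo_res <-
  data_loo %>%
  mutate(
    fits = map(splits, ~ fit(svm_spec, Y ~ ., data = analysis(.x))),
    # Keep the held-out row's true class next to its predicted probabilities
    predicted = map2(
      splits, fits,
      ~ bind_cols(
        predict(.y, assessment(.x), type = "prob"),
        select(assessment(.x), Y)
      )
    )
  )

# One row per held-out observation, with truth and probabilities together
all_pred_aligned <- bind_rows(loo_res$predicted)

roc_auc(all_pred_aligned, Y, `.pred_-1`)
```

Because each prediction carries its own true class, the pooled metric stays valid however the splits are ordered.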