I'm using Keras to build deep CNNs, and I want to add a set of channels to each layer that will act as a coordinate system for every point. That is, the convolution operations will know "where" a pixel is from the activity they see at that point in the coordinate channels. Here is the code for creating the coordinate channels:

library(keras)

library(EBImage)

coordinates <- function(dim_x, dim_y) {
  # Pixel-centre coordinates scaled to (-1, 1); map_r is the distance from the centre.
  map_x <- replicate(dim_y, (0.5 - dim_x / 2):(dim_x / 2 - 0.5)) * 2 / dim_x
  map_y <- t(replicate(dim_x, (0.5 - dim_y / 2):(dim_y / 2 - 0.5))) * 2 / dim_y
  map_r <- sqrt(map_x^2 + map_y^2)

  # Each channel highlights a single coordinate position (x, y, r):
  # 2 * num + 1 channels for x, 2 * num + 1 for y, and num + 1 for r.
  num <- 2
  maps <- array(data = 0, dim = c(dim_x, dim_y, 5 * num + 3))
  ch <- 0  # running channel index (named ch to avoid shadowing base::c)
  for (x_pos in (-num:num) / num) {
    ch <- ch + 1
    maps[, , ch] <- 1 - num * abs(map_x - x_pos)
  }
  for (y_pos in (-num:num) / num) {
    ch <- ch + 1
    maps[, , ch] <- 1 - num * abs(map_y - y_pos)
  }
  for (r_pos in (0:num) / num) {
    ch <- ch + 1
    maps[, , ch] <- 1 - num * abs(map_r - r_pos)
  }

  # Clip negative activations to zero.
  maps[maps < 0] <- 0
  return(maps)
}
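
To illustrate the encoding, here is a minimal 1-D sketch of what the x channels compute, using a hypothetical 5-pixel axis (`dim_x <- 5` is chosen only for illustration and is not one of my real dimensions). Each channel is a triangular "tent" centred on one position in [-1, 1], so a pixel activates at most two neighbouring channels.

```r
num <- 2
dim_x <- 5  # hypothetical axis length, for illustration only

# Pixel-centre coordinates, same formula as map_x: -0.8 -0.4 0.0 0.4 0.8
x <- ((0.5 - dim_x / 2):(dim_x / 2 - 0.5)) * 2 / dim_x

# Channel centres: -1.0 -0.5 0.0 0.5 1.0
centers <- (-num:num) / num

# One row per pixel, one column per channel; negatives are clipped to 0,
# as in coordinates()
tent <- sapply(centers, function(p) pmax(0, 1 - num * abs(x - p)))

print(round(tent, 2))
```

Within each row (one pixel), the activations sum to 1, so the position is effectively encoded as a linear interpolation between the two nearest channel centres.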

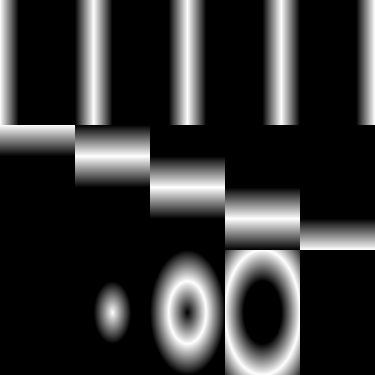

maps <- coordinates(75, 125)

img <- cbind(rbind(maps[,, 1], maps[,, 2], maps[,, 3], maps[,, 4], maps[,, 5]),

rbind(maps[,, 6], maps[,, 7], maps[,, 8], maps[,, 9], maps[,,10]),

rbind(0*maps[,,10], maps[,,11], maps[,,12], maps[,,13], 0*maps[,,13]))

EBImage::display(img)

Here's the output:

My problem is that I can't figure out how to insert this array into the network. The input to the network has shape (?, 75, 125, 3), and the coordinate channels have shape (75, 125, 13). After inserting the coordinates, it should become (?, 75, 125, 16). However, running the code below seems to lead to an infinite loop.

input <- layer_input(shape = c(75, 125, 3)) %>%
  layer_lambda(function(x) k_concatenate(c(x, maps)))
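
For reference, the result I'm after can be sketched with plain R arrays, without any Keras tensors. The batch size of 2, the random `x`, and the zero-filled `maps_demo` stand-in are assumptions for illustration only. The point is that the 13 coordinate channels lack a batch dimension and have to be repeated for every sample before they can sit next to the 3 image channels.

```r
batch <- 2  # hypothetical batch size

# Stand-in for a batch of inputs with shape (batch, 75, 125, 3)
x <- array(runif(batch * 75 * 125 * 3), dim = c(batch, 75, 125, 3))

# Stand-in for coordinates(75, 125), shape (75, 125, 13)
maps_demo <- array(0, dim = c(75, 125, 13))

# Target array: 3 image channels followed by 13 coordinate channels
out <- array(0, dim = c(batch, 75, 125, 16))
out[, , , 1:3] <- x
for (b in seq_len(batch)) {
  out[b, , , 4:16] <- maps_demo  # same coordinate channels for every sample
}

print(dim(out))
```

This is not a fix for the Keras code above, just a statement of the intended shapes.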

Does the k_concatenate() function have a problem with 'maps' not being a Tensor? (Or not having a NULL first dimension?) If so, how should I address this? Would there be some way to sneak in the channels via an auxiliary input? Any help is appreciated.