I have been working on a project on late-arriving flights in 2019 at the top 10 US airports. The dataset has more than 140,000 observations and 9 attributes:

- FL_MONTH - month of the flight, from January to December
- FL_DAY - weekday of the flight
- CARRIER - AA, DL, UA or WN (just the top 4 US airlines)
- ORIGIN - one of the top 10 airports (ATL, DEN, DFW, JFK, LAS, LAX, MCO, ORD, SEA, SFO)
- DEP_DELAY - departure delay in minutes
- ARR_DELAY - arrival delay in minutes
- CANCELLED - 0: not cancelled, 1: cancelled
- DIVERTED - 0: not diverted, 1: diverted
- DELAY30_MINS - 0: not delayed more than 30 mins, 1: delayed more than 30 mins

I have been plotting and modelling the data. I am trying to predict 4 of the attributes, namely FL_MONTH, FL_DAY, CANCELLED and DIVERTED, and I compare the models by their accuracy.

For predicting FL_MONTH and FL_DAY, I use rpart, J48 and random forest as the method argument of train().

For predicting CANCELLED and DIVERTED, I use rpart, glm, random forest and naive Bayes as the method argument of train().

Using a 75/25 train/test split, my code looks like this:

```r
library(caret)

# Using a 75% training and 25% testing split (DF.train / DF.test)
# 10-fold cross-validation on DF.train, trying up to 5 candidate values of cp
month.rpart.fit = train(FL_MONTH ~ ., data = DF.train, method = "rpart", tuneLength = 5,
                        trControl = trainControl(method = "cv", number = 10))
varImp(month.rpart.fit)                                # variable importance
month.rpart.pred = predict(month.rpart.fit, DF.test)   # predictions on the held-out 25%
confusionMatrix(month.rpart.pred, DF.test$FL_MONTH)
```
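The post does not show how DF.train and DF.test were created. Because cancelled and diverted flights are rare, a stratified split (sampling 75% within each class of the outcome) keeps their share the same in both parts; caret's createDataPartition() does this for a factor outcome. Below is a minimal base-R sketch of the same idea; stratified_split() is a hypothetical helper and the labels are made up.

```r
# Hypothetical helper: stratified split that samples a fraction p of the rows
# within each class, so rare classes (e.g. CANCELLED == 1) keep their share.
stratified_split <- function(y, p = 0.75, seed = 42) {
  set.seed(seed)
  y <- as.factor(y)
  idx_by_class <- split(seq_along(y), y)   # row indices grouped by class
  train_idx <- unlist(lapply(idx_by_class, function(idx) {
    idx[sample.int(length(idx), size = round(p * length(idx)))]
  }), use.names = FALSE)
  sort(train_idx)
}

# Made-up labels: 95 "0"s and 5 "1"s
y <- factor(c(rep("0", 95), rep("1", 5)))
train_idx <- stratified_split(y)
table(y[train_idx])    # training part
table(y[-train_idx])   # test part
```

With real data this would be used as DF.train <- DF[train_idx, ] and DF.test <- DF[-train_idx, ].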

Here are my prediction results

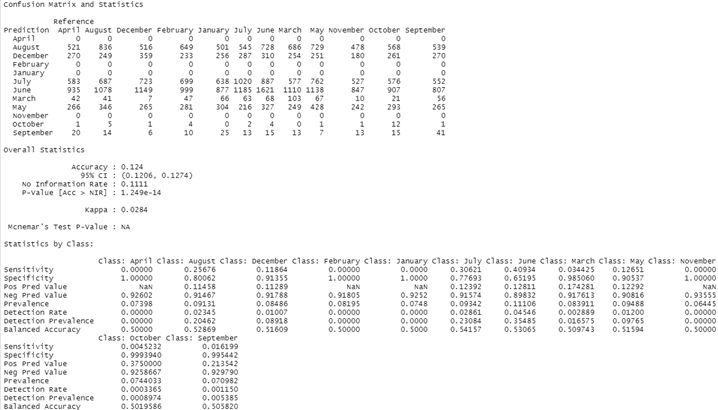

- The accuracy of the 3 classifiers when predicting FL_MONTH is around 12.25 - 12.4%

- The accuracy of the 3 classifiers when predicting FL_DAY is around 18.02 - 18.6%

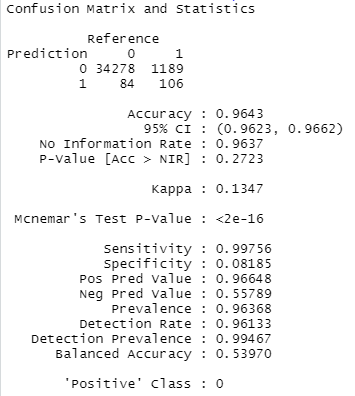

- The accuracy of the 4 classifiers when predicting CANCELLED is around 95 - 96%

- The accuracy of the 4 classifiers when predicting DIVERTED is around 99 - 99.4%
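To judge these accuracies it helps to compare them with two baselines: uniform chance (1 divided by the number of classes) and the no-information rate (NIR), i.e. the share of the most frequent class, which is what a model scores by always predicting that class. caret's confusionMatrix() prints the latter as "No Information Rate", together with "P-Value [Acc > NIR]". With 12 months chance is about 8.3%, and with 7 weekdays about 14.3%, so the results above are only a few percentage points better than guessing. For CANCELLED, if for example 4% of flights were cancelled, always predicting "not cancelled" would already score 96%. A small base-R sketch on made-up label vectors (baseline_accuracy() is a hypothetical helper):

```r
# Two reference points for judging classification accuracy:
#  - uniform_chance: 1 / number of classes
#  - nir: share of the most frequent class (accuracy of always predicting it)
baseline_accuracy <- function(y) {
  y <- as.factor(y)
  props <- prop.table(table(y))
  c(uniform_chance = 1 / nlevels(y), nir = max(props))
}

# Made-up examples, not the real data
months <- factor(rep(month.name, times = c(10, 9, 10, 10, 10, 10, 11, 11, 10, 10, 9, 10)))
baseline_accuracy(months)      # uniform chance 1/12, NIR 11/120

cancelled <- factor(c(rep(0, 96), rep(1, 4)))
baseline_accuracy(cancelled)   # uniform chance 0.5, NIR 0.96
```

If a model's accuracy is close to the NIR, it has learned little beyond the class frequencies.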

Here are the 2 issues I am not able to understand or fix:

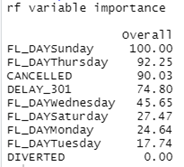

1st issue: Since FL_MONTH and FL_DAY are multi-class classification problems, I am using tree-based classifiers, but the accuracy is very poor and I am not sure how to increase it. I tried training FL_MONTH against all of the other 8 variables, and then against various subsets of them, but the accuracy is always between 10 and 12%.
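Before tuning the classifiers further, one way to check whether the predictors carry any information about the month at all is to measure the association between each categorical predictor and the target. The sketch below uses Cramér's V, a standard chi-squared based measure (a swapped-in diagnostic, not part of the original analysis), on simulated data. On the real data it would be called as e.g. cramers_v(DF$ORIGIN, DF$FL_MONTH); numeric columns such as DEP_DELAY would first need to be binned with cut(). It only captures pairwise association, not interactions between predictors.

```r
# Cramér's V: association between two categorical variables,
# from 0 (independent) to 1 (one fully determines the other).
cramers_v <- function(x, y) {
  tab <- table(x, y)
  # correct = FALSE disables Yates' continuity correction for 2x2 tables
  chi2 <- suppressWarnings(chisq.test(tab, correct = FALSE)$statistic)
  k <- min(nrow(tab), ncol(tab))
  unname(sqrt(chi2 / (sum(tab) * (k - 1))))
}

set.seed(1)
n <- 1200
target <- factor(sample(month.abb, n, replace = TRUE))
unrelated <- factor(sample(c("ATL", "DEN", "ORD"), n, replace = TRUE))  # independent of target
same_as_target <- target                                                # fully determined by target

cramers_v(unrelated, target)        # close to 0
cramers_v(same_as_target, target)   # 1
```

Values near 0 for every predictor would suggest that switching classifiers is unlikely to help much.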

Variable importance and confusion matrix for random forest when predicting FL_MONTH

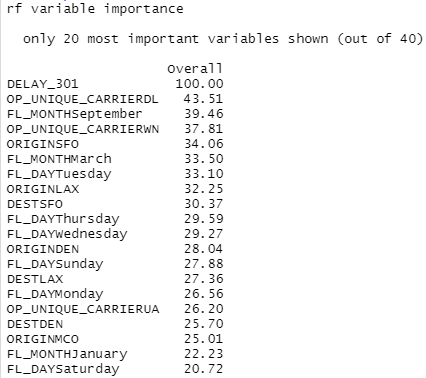

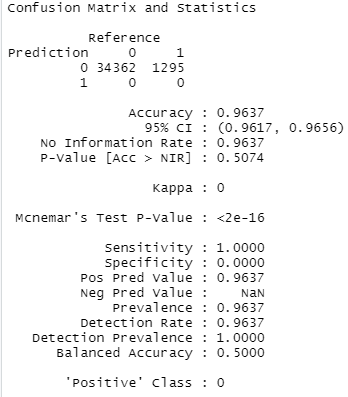

2nd issue: The CANCELLED and DIVERTED predictions have high accuracy, but for some reason the specificity is below 10% with rpart and random forest, and 0 with glm and Naive Bayes. In the latter 2 cases this makes Neg Pred Value NaN.
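In caret's confusionMatrix(), the positive class defaults to the first factor level. With levels "0" and "1" that is "0" (not cancelled), so Sensitivity is the share of non-cancelled flights that are recognised and Specificity is the share of cancelled flights that are recognised. A model that never predicts "1" gets Specificity 0, and Neg Pred Value becomes 0/0, which R reports as NaN. The base-R sketch below reproduces that layout (rows = predictions, columns = reference) on made-up labels; binary_metrics() is a hypothetical helper, and its count-based PPV/NPV agree with caret's prevalence-based formulas when the prevalence is left at its default.

```r
# Metrics from a 2x2 confusion matrix laid out like caret's confusionMatrix():
# rows = predictions, columns = reference, first factor level = "positive".
binary_metrics <- function(pred, ref) {
  tab <- table(Prediction = pred, Reference = ref)
  pos <- levels(ref)[1]; neg <- levels(ref)[2]
  tp <- tab[pos, pos]; fn <- tab[neg, pos]
  fp <- tab[pos, neg]; tn <- tab[neg, neg]
  c(accuracy    = (tp + tn) / sum(tab),
    sensitivity = tp / (tp + fn),   # recall of the first level ("0" = not cancelled)
    specificity = tn / (tn + fp),   # recall of the second level ("1" = cancelled)
    pos_pred    = tp / (tp + fp),
    neg_pred    = tn / (tn + fn))   # 0/0 = NaN if the second level is never predicted
}

ref  <- factor(c(rep("0", 96), rep("1", 4)), levels = c("0", "1"))
pred <- factor(rep("0", 100), levels = c("0", "1"))   # a "never cancelled" model
binary_metrics(pred, ref)
```

Passing positive = "1" to confusionMatrix() makes the cancelled class the positive one, so the same numbers then appear as Sensitivity and Pos Pred Value.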

Variable importance and confusion matrix for random forest when predicting CANCELLATIONS

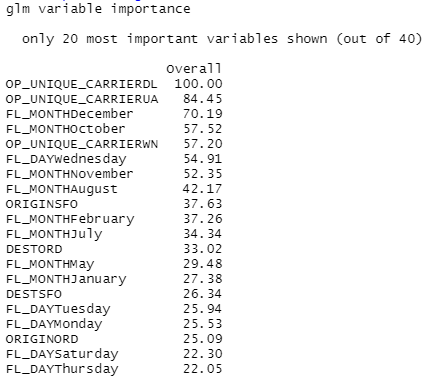
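A common remedy for a rare class is to rebalance the training data, for example by down-sampling the majority class. caret can do this inside each resampling iteration via trainControl(sampling = "down") (or "up"); the base-R sketch below only illustrates what down-sampling does, using a hypothetical helper and made-up labels. It should be applied to the training rows only, so that the test set keeps the real class balance, and it usually trades some overall accuracy for a higher detection rate of the rare class.

```r
# Hypothetical helper: random down-sampling so every class keeps as many
# rows as the smallest class (apply to TRAINING rows only).
downsample_rows <- function(y, seed = 1) {
  set.seed(seed)
  y <- as.factor(y)
  idx_by_class <- split(seq_along(y), y)   # row indices grouped by class
  n_min <- min(lengths(idx_by_class))      # size of the smallest class
  keep <- unlist(lapply(idx_by_class, function(idx) {
    idx[sample.int(length(idx), n_min)]
  }), use.names = FALSE)
  sort(keep)
}

y <- factor(c(rep("0", 96), rep("1", 4)))
keep <- downsample_rows(y)
table(y[keep])   # 4 rows of each class
```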

Variable importance and confusion matrix for glm when predicting CANCELLATIONS

Is there a way to find out which variables contribute to the accuracy? I don't understand how to read the variable importance when it lists individual values such as LAX, LAS etc. instead of the variable as a whole, e.g. ORIGIN - 88%.
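With the formula interface (FL_MONTH ~ .), caret expands each factor into one dummy column per level, which is why varImp() lists individual airports rather than ORIGIN as a whole. Using the x/y interface instead, e.g. train(x = DF.train[, names(DF.train) != "FL_MONTH"], y = DF.train$FL_MONTH, ...), should keep the factors intact for tree-based methods such as rpart and rf. Alternatively, the per-level scores can be summed back onto their variables, as in this base-R sketch; aggregate_importance() is hypothetical, summing is only a heuristic, and the unscaled scores from varImp(fit, scale = FALSE) are a more sensible input than the default 0-100 scaled ones.

```r
# Hypothetical helper: sum per-level (dummy) importance scores such as
# "ORIGINLAX" back onto the original variable names by longest prefix match.
aggregate_importance <- function(imp, vars) {
  owner <- vapply(names(imp), function(nm) {
    hits <- vars[startsWith(nm, vars)]
    if (length(hits) == 0) NA_character_ else hits[which.max(nchar(hits))]
  }, character(1))
  agg <- tapply(imp, owner, sum)   # scores with no matching variable (NA) are dropped
  sort(setNames(as.vector(agg), names(agg)), decreasing = TRUE)
}

# Made-up scores, only to show the mechanics
imp <- c(ORIGINLAX = 10, ORIGINLAS = 5, CARRIERDL = 3, DEP_DELAY = 20)
aggregate_importance(imp, c("ORIGIN", "CARRIER", "DEP_DELAY"))
```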

The first 40 rows of my dataset (I remove the FL_DATE column before training):

```
FL_DATE,FL_DAY,FL_MONTH,CARRIER,ORIGIN,DEP_DELAY,ARR_DELAY,CANCELLED,DIVERTED,DELAY30_MINS
1/1/2019,Tuesday,January,AA,LAX,21,12,0,0,0
1/1/2019,Tuesday,January,AA,SFO,0,8,0,0,0
1/1/2019,Tuesday,January,AA,JFK,0,20,0,0,0
1/1/2019,Tuesday,January,AA,DFW,27,39,0,0,0
1/1/2019,Tuesday,January,AA,LAX,0,1,0,0,0
1/1/2019,Tuesday,January,AA,DEN,0,14,0,0,0
1/1/2019,Tuesday,January,AA,JFK,23,40,0,0,0
1/1/2019,Tuesday,January,AA,SFO,12,24,0,0,0
1/1/2019,Tuesday,January,AA,LAS,15,6,0,0,1
1/1/2019,Tuesday,January,AA,DFW,38,10,0,0,0
1/1/2019,Tuesday,January,AA,SEA,0,24,0,0,1
1/1/2019,Tuesday,January,AA,ORD,31,28,0,0,0
1/1/2019,Tuesday,January,AA,LAX,4,5,0,0,0
1/1/2019,Tuesday,January,AA,DFW,22,9,0,0,0
1/1/2019,Tuesday,January,AA,ORD,6,2,0,0,0
1/1/2019,Tuesday,January,AA,DFW,3,12,0,0,1
1/1/2019,Tuesday,January,AA,ORD,120,128,0,0,1
1/1/2019,Tuesday,January,AA,ORD,37,21,0,0,1
1/1/2019,Tuesday,January,AA,DFW,36,16,0,0,0
1/1/2019,Tuesday,January,AA,DFW,9,1,0,0,1
1/1/2019,Tuesday,January,AA,ORD,48,46,0,0,0
1/1/2019,Tuesday,January,AA,LAX,0,10,0,0,0
1/1/2019,Tuesday,January,AA,ORD,20,27,0,0,1
1/1/2019,Tuesday,January,AA,LAX,35,17,0,0,0
1/1/2019,Tuesday,January,AA,DFW,0,12,0,0,0
1/1/2019,Tuesday,January,AA,LAS,0,8,0,0,0
1/1/2019,Tuesday,January,AA,LAX,17,19,0,0,1
1/1/2019,Tuesday,January,AA,DFW,51,41,0,0,1
1/1/2019,Tuesday,January,AA,LAS,39,37,0,0,0
1/1/2019,Tuesday,January,AA,LAX,12,14,0,0,1
1/1/2019,Tuesday,January,AA,LAS,84,71,0,0,1
1/1/2019,Tuesday,January,AA,LAX,94,107,0,0,1
1/1/2019,Tuesday,January,AA,ORD,84,68,0,0,0
1/1/2019,Tuesday,January,AA,DFW,3,12,0,0,1
1/1/2019,Tuesday,January,AA,ORD,124,118,0,0,0
1/1/2019,Tuesday,January,AA,ORD,13,20,0,0,0
1/1/2019,Tuesday,January,AA,LAS,0,6,0,0,0
1/1/2019,Tuesday,January,AA,ORD,155,158,0,0,1
1/1/2019,Tuesday,January,AA,ATL,50,15,0,0,1
1/1/2019,Tuesday,January,AA,LAX,54,34,0,0,1
```
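The preprocessing described above (dropping FL_DATE, treating the targets as classes) can be sketched in base R on a few of these rows; the inline CSV is an excerpt of the sample, and the actual loading code is not shown in the post, so this is an assumption about how it might look. Setting the factor levels explicitly also decides which level caret treats as the positive class (the first one) and the row/column order of the confusion matrices.

```r
# Excerpt of the sample rows above, read from an inline string
csv <- "FL_DATE,FL_DAY,FL_MONTH,CARRIER,ORIGIN,DEP_DELAY,ARR_DELAY,CANCELLED,DIVERTED,DELAY30_MINS
1/1/2019,Tuesday,January,AA,LAX,21,12,0,0,0
1/1/2019,Tuesday,January,AA,SFO,0,8,0,0,0
1/1/2019,Tuesday,January,AA,LAS,15,6,0,0,1"

DF <- read.csv(text = csv, stringsAsFactors = FALSE)
DF$FL_DATE <- NULL   # removed before training

# Targets must be factors, otherwise train() fits a regression model.
# Explicit levels keep calendar order and all classes, even if some are absent.
DF$FL_MONTH  <- factor(DF$FL_MONTH, levels = month.name)
DF$FL_DAY    <- factor(DF$FL_DAY, levels = c("Monday", "Tuesday", "Wednesday",
                                             "Thursday", "Friday", "Saturday", "Sunday"))
DF$CARRIER   <- factor(DF$CARRIER)
DF$ORIGIN    <- factor(DF$ORIGIN)
DF$CANCELLED <- factor(DF$CANCELLED, levels = c(0, 1))   # first level "0" = positive in caret
DF$DIVERTED  <- factor(DF$DIVERTED, levels = c(0, 1))
str(DF)
```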

Note: rpart and glm finish in less than 10 minutes, while random forest takes around 45 minutes and Naive Bayes takes at least 3.5 hours. I am somewhat surprised by how long some of the algorithms take.
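Part of the run-time difference comes from how many models train() fits. Roughly, with 10-fold cross-validation caret fits every candidate in the tuning grid once per fold and then refits the chosen candidate on the whole training set. For a grid of G candidates and K folds (ignoring the sub-model shortcuts caret uses for some methods), and assuming tuneLength = 5 yields G = 5 candidates:

$$
N_{\text{fits}} = G \cdot K + 1 = 5 \cdot 10 + 1 = 51
$$

For random forest, assuming 5 values of mtry and the randomForest default of 500 trees, that is about 51 × 500 = 25,500 trees, each grown on 9/10 of the training data (except the final refit). The cost per fit, and per prediction, then differs by method.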