Hey guys, I'm doing a project where I analyse Trump's speeches (text analysis).

My code looks like this:

```r
library(dplyr)  # mutate()

# Read the files in
# lapply() returns a list the same length as txt_files_ls
# (txt_files_ls holds the .txt file paths and is created earlier, not shown)
# Each file is read into a data frame with read.table():
#   header = FALSE because the column name is added later
#   sep = "\t" means the fields are tab-delimited
#   quote = "" and comment.char = "" stop apostrophes and "#" in the
#   speech text from being treated as quotes or comments
#   stringsAsFactors = FALSE keeps the text as character, not factor
# read.delim() is read.table() with sep = "\t" and header = TRUE as defaults
txt_files_df_list <- lapply(txt_files_ls, function(x) {
  read.table(file = x, header = FALSE, sep = "\t", quote = "",
             comment.char = "", stringsAsFactors = FALSE)
})

# Combine them and name the single column "Speech" with setNames()
# do.call() builds and executes a function call from a name or function,
# here rbind() applied to every data frame in the list
combined_df <- setNames(do.call("rbind", txt_files_df_list), c("Speech"))

# Locations of the speeches, in the same order as the files in the list
location <- c("Bemidji", "Fayetteville", "Freeland", "Henderson", "Latrobe",
              "Minden", "Mosinee", "Ohio", "Pittsburgh", "Winston-Salem")

# Add the locations as a new column with dplyr's mutate()
# (this only works if combined_df has exactly one row per file)
combined_df_2 <- mutate(combined_df, Location = location)

# Dates of the speeches, taken from the file titles, in the same order
date <- c("2020-09-18", "2020-09-19", "2020-09-10", "2020-09-13", "2020-09-03",
          "2020-09-12", "2020-09-17", "2020-09-21", "2020-09-22", "2020-09-08")

# Convert the strings to Date objects with as_date(), passing the format by name
date_2 <- lubridate::as_date(date, format = "%Y-%m-%d")

# Add the dates as a new column, again with mutate()
combined_df_3 <- mutate(combined_df_2, Date = date_2)

# Check the structure: Speech and Location should be character, Date should be Date
str(combined_df_3)

View(combined_df_3)  # opens the data viewer, e.g. in RStudio
```

My question is: how would I break the text into individual tokens and transform it into a tidy data structure?

How would I tokenize the dialogue, splitting each sentence into separate words?

When I try to do it myself with the code:

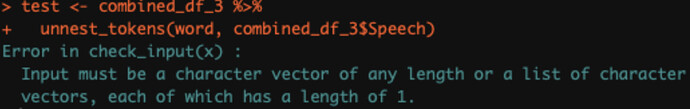

```r
test_df <- combined_df_3 %>%
  unnest_tokens(word, combined_df_3$Speech)
```

I get the error:

Any guidance would be appreciated!
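A likely fix, sketched on a small made-up data frame standing in for `combined_df_3` (assuming the tidytext and dplyr packages are installed): `unnest_tokens()` takes the name of the new output column first and then the input column as a bare column name. Because the data frame is already piped in, `combined_df_3$Speech` should most likely just be `Speech`. The input column also has to be character rather than factor, so if `str()` shows `Speech` as a factor, convert it with `as.character()` first.

```r
library(dplyr)
library(tidytext)

# Toy stand-in for combined_df_3 (made-up text, not from the real speeches)
toy_df <- data.frame(
  Speech   = c("We will win again.", "Thank you, Ohio!"),
  Location = c("Bemidji", "Ohio"),
  stringsAsFactors = FALSE  # unnest_tokens() needs character input, not factors
)

# Output column name first (word), then the input column as a bare name (Speech).
# By default the text is split into words, lower-cased, punctuation is dropped,
# and the original Speech column is removed.
tokens <- toy_df %>%
  unnest_tokens(word, Speech)

tokens
```

Each row of `tokens` is one word, with the other columns (here `Location`) repeated alongside it, which is the one-token-per-row tidy structure. On the real data the same call would be `combined_df_3 %>% unnest_tokens(word, Speech)`. tidytext also ships a `stop_words` data frame, which can be removed afterwards with `anti_join(stop_words, by = "word")`.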

Also, is there a way to make my original code shorter, by extracting the location and date from each file name and putting them into their own columns next to the file contents, so the data frame has Speech, Location and Date columns? That would also be helpful!
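One way to shorten this, sketched under an assumption: the real file names are not shown, so the hypothetical `read_speeches()` helper below assumes names like `Bemidji_2020-09-18.txt` (location, underscore, ISO date). It reads each file whole with `readLines()`, so every speech becomes exactly one row, and takes `Location` and `Date` from the file name instead of typing them out by hand. The two demo files are made up and written to a temporary folder only so the example runs on its own.

```r
# Hypothetical helper: one row per speech file, with Location and Date taken
# from file names assumed to look like "<Location>_<YYYY-MM-DD>.txt"
read_speeches <- function(paths) {
  # File name without folder or ".txt", e.g. "Winston-Salem_2020-09-08"
  stems <- tools::file_path_sans_ext(basename(paths))
  data.frame(
    # Read each file whole and join its lines, so each speech is one string
    Speech = vapply(paths,
                    function(p) paste(readLines(p, warn = FALSE), collapse = " "),
                    character(1), USE.NAMES = FALSE),
    # Everything before the last underscore is the location
    Location = sub("_[^_]*$", "", stems),
    # Everything after the last underscore is the date
    Date = as.Date(sub("^.*_", "", stems), format = "%Y-%m-%d"),
    stringsAsFactors = FALSE
  )
}

# Demo with two made-up files in a temporary folder
demo_dir <- file.path(tempdir(), "speeches_demo")
dir.create(demo_dir, showWarnings = FALSE)
writeLines(c("First line of a made-up speech.", "Second line."),
           file.path(demo_dir, "Bemidji_2020-09-18.txt"))
writeLines("Another made-up speech.",
           file.path(demo_dir, "Winston-Salem_2020-09-08.txt"))

txt_files_ls <- list.files(demo_dir, pattern = "\\.txt$", full.names = TRUE)
speeches <- read_speeches(txt_files_ls)
str(speeches)
```

On the real data this would be `read_speeches(txt_files_ls)`, provided the file names follow the assumed pattern; otherwise the regular expressions in the two `sub()` calls would need adjusting. Because `Speech` comes out as character, the result can go straight into `unnest_tokens(word, Speech)`.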