I'd like to automate downloading a data file from a website. That way, users of my package won't need to do this step manually, and I won't need to explain how to find the file and where to put it.

I'm familiar with web scraping using the 'rvest' package. However, this problem is different: the data are in a file that is only downloaded after the user clicks a link on the webpage, and that click is what I'm trying to automate. Does anyone know of a way to do this from R? I'd also be open to command-line solutions.

Here are instructions to get the data file manually:

Navigate to the webpage with the data: https://www.adfg.alaska.gov/index.cfm?adfg=commercialbyareakuskokwim.btf

Scroll down and click the "Daily CPUE All Species" banner:

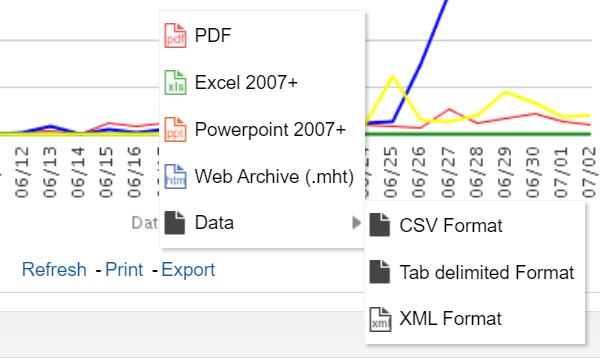

Scroll to the bottom and select "Export" > "Data" > "CSV Format".

Clicking that option downloads the data file. Does anyone know how to automate these steps?

I've contacted the website's maintainers, but they weren't able to advise me on how to automate this.

Thank you in advance for any insights you might have!