Hi, R community! Thanks in advance for considering my enquiry.

I want to write a script that does the following:

- Input files: 1115 XML files saved in my directory (tagged for 39 features)

- Operation: count each of the 39 features in each XML file

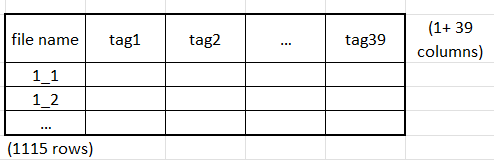

- Output: a frequency table looking like the one below

So far, I have the script below, which uses the ‘xml2’ package. The script finds a feature and tells me how many times it appears. For example, in the script below, I count the frequency of ‘tag1’ in the XML file named ‘1_1’. The ‘tag1’ feature appears within the dependency sections of the XML files.

setwd('~/my directory/')

install.packages('xml2')

library(xml2)

text <- read_xml(x = '1_1.xml')

# find dependency sections

dependencies <- xml_find_all(text, './/dependencies')

# find '<dep ...>' tags inside the dependency sections

deps <- xml_find_all(dependencies, './/dep')

# find tags for the features you are interested in, e.g.: ‘tag1’

tag1 <- deps[grep('type="tag1"', deps)]

N_tag1 <- length(tag1)

The code above needs improvement in two respects.

First, the code above does not repeat the job for each of the 39 tags (e.g., ‘tag1’) in each of the 1,115 XML files.

So the code needs to be written so that R counts each of the 39 tags in each of the 1,115 XML files in one go, or at least with far less code than repeating the script above for every tag and file.

I think a ‘for’ loop might do the job, but I don’t know how to rewrite the script to use one.
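For concreteness, here is a minimal sketch of what such a loop might look like for a single document. It is only an illustration under assumptions: the inline XML is a toy stand-in for a file such as ‘1_1.xml’, the tag names are placeholders, and it matches the `type` attribute with an XPath predicate rather than with `grep`.

```r
library(xml2)

# Toy document standing in for one real file (structure assumed, not the real data).
doc <- read_xml('<root>
  <dependencies>
    <dep type="tag1"/>
    <dep type="tag2"/>
    <dep type="tag1"/>
  </dependencies>
</root>')

# Hypothetical tag names; the real vector would list all 39 tags.
tags <- c("tag1", "tag2", "tag3")

# Pre-allocate a named integer vector with one slot per tag.
counts <- setNames(integer(length(tags)), tags)

for (tag in tags) {
  # Select <dep> nodes under <dependencies> whose type attribute equals the tag.
  xpath <- sprintf('.//dependencies//dep[@type="%s"]', tag)
  counts[tag] <- length(xml_find_all(doc, xpath))
}

print(counts)
```

A tag that never occurs (here ‘tag3’) simply keeps its count of zero, so every file would yield a vector of the same length.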

Second, the frequencies of each tag (e.g., ‘tag1’) need to be assembled into a frequency table as the output. I have no idea which function can do that once the tags have been counted in all the texts.
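One possible shape for the table, sketched under assumptions: each file becomes a row and each tag a column. The two toy files written to a temporary folder, the tag names, and the helper `count_tags()` are all hypothetical placeholders, not part of my real data or of ‘xml2’ itself.

```r
library(xml2)

# Two toy files in a temporary folder stand in for the 1,115 real ones.
xml_dir <- file.path(tempdir(), "xml_demo")
dir.create(xml_dir, showWarnings = FALSE)
writeLines('<root><dependencies><dep type="tag1"/><dep type="tag2"/><dep type="tag1"/></dependencies></root>',
           file.path(xml_dir, "1_1.xml"))
writeLines('<root><dependencies><dep type="tag2"/></dependencies></root>',
           file.path(xml_dir, "1_2.xml"))

# Hypothetical tag names; the real vector would list all 39 tags.
tags <- c("tag1", "tag2", "tag3")

# Hypothetical helper: count every tag in one file.
# Reads the type attribute of each <dep>, then tabulates against the
# fixed tag list so that absent tags are reported as 0.
count_tags <- function(path, tags) {
  deps <- xml_find_all(read_xml(path), ".//dependencies//dep")
  types <- xml_attr(deps, "type")
  as.vector(table(factor(types, levels = tags)))
}

files <- list.files(xml_dir, pattern = "\\.xml$", full.names = TRUE)

# vapply returns a tags-by-files matrix; transpose it to one row per file.
counts <- vapply(files, count_tags, integer(length(tags)), tags = tags)
freq <- data.frame(file = tools::file_path_sans_ext(basename(files)),
                   t(counts), row.names = NULL)
names(freq)[-1] <- tags

print(freq)
# write.csv(freq, "tag_frequencies.csv", row.names = FALSE)  # optional export
```

With the real data, `xml_dir` would point at the folder holding the XML files, and the commented `write.csv()` line could save the table for use elsewhere.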

Any suggestions will be much appreciated. Thanks for reading this question.