I've encountered this a few times and I'm trying to figure out the best way to deal with it.

At work we get a lot of files in excel format. We also have a bunch of historical data in this format as well that I'm trying to compile. One issue I've noticed is a lot of data has weird significant figure issues. So for example in excel a field my look like 0.007 but when I start processing it I'll find out it really looks like this: 7.0000000000000001E-3. I'm using readxl to access the data, but this is't an issue with that package because when I edit xml in the excel file it actually looks like this:

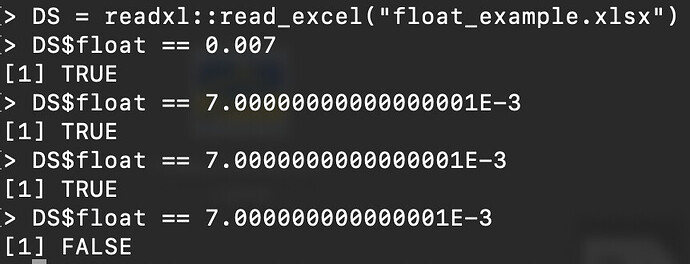

As you can see here I can test these things numerically and there is no difference beyond the 15th decimal place:

So numerically these things aren't issues, but when I construct shiny apps around these data they end up with all these weird trailing 000...01s and 999999s (it happens the other way as well).

This is clearly an artifact of excel, but it causes a couple issues for me. One is visual aesthetics for end users. The other is automated QCing of data.

What I've been doing is kind of forcing rounding using significant figures. It seems to work in this case but I think it's probably not the best way to deal with it.

I'm wondering if anyone has experienced this and how thy deal with it. Alternatively, if anyone has any thoughts on how to deal with this?