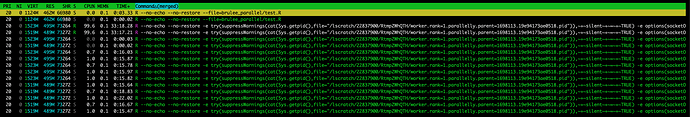

I am trying to fit a neural network model using brulee. When I run it in parallel, I get the following error messages:

```
libgomp: Thread creation failed: Resource temporarily unavailable

Error in unserialize(node$con) :
  MultisessionFuture (doFuture-3) failed to receive message results from cluster RichSOCKnode #3 (PID 262238 on localhost ‘localhost’). The reason reported was ‘error reading from connection’. Post-mortem diagnostic: No process exists with this PID, i.e. the localhost worker is no longer alive. The total size of the 39 globals exported is 1.41 MiB. The three largest globals are ‘fn_tune_grid_loop_iter’ (356.12 KiB of class ‘function’), ‘predict_model’ (261.09 KiB of class ‘function’) and ‘metrics’ (167.09 KiB of class ‘function’)

libgomp: Thread creation failed: Resource temporarily unavailable
```
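The `libgomp` lines suggest the workers ran out of OpenMP threads. As a rough back-of-the-envelope check, assuming (not verified) that every worker loads torch and that its OpenMP pool defaults to one thread per core, the number of threads requested grows as workers times cores. The helper below is hypothetical and only does that arithmetic:

```r
# Hypothetical helper: rough OpenMP thread count if every parallel worker
# opens a pool with one thread per core (an unverified assumption).
thread_budget <- function(n_workers, n_cores) {
  list(
    # total threads requested across all workers
    requested  = n_workers * n_cores,
    # threads per worker that keep the total at or below the core count
    per_worker = max(1L, n_cores %/% n_workers)
  )
}

# Example: 12 workers on a hypothetical 24-core machine
# ($requested is 288 and $per_worker is 2)
thread_budget(n_workers = 12, n_cores = 24)
```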

Here is the code I am trying to run:

```r
library(tidymodels)

# Model specification
brulee_spec <-
  mlp(hidden_units = tune(),
      penalty = tune(),
      epochs = tune()) |>
  set_engine("brulee") |>
  set_mode("classification")

# Parallel backend
library(doFuture)
registerDoFuture()
parallelly::availableCores()
plan(multisession, workers = 12)

# Tuning
# brulee_wf, param_grid and ten_fold_cv are defined elsewhere (not shown)
tune_res <- tune_grid(
  brulee_wf,
  grid = param_grid,
  resamples = ten_fold_cv,
  metrics = metric_set(accuracy, bal_accuracy, j_index, mn_log_loss,
                       brier_class, roc_auc, kap, mcc)
)
```
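A possible workaround, sketched under the assumption (not confirmed for brulee) that capping OpenMP at one thread per process avoids the `libgomp` failure, is to set `OMP_NUM_THREADS` before any workers are started. Each worker is a new R process that copies the parent's environment at launch, so the variable has to be in place before `plan()` runs. A plain base-R PSOCK cluster stands in here for future's multisession workers, which are also separate background R sessions:

```r
library(parallel)

# Assumption: one OpenMP thread per process keeps 12 torch workers from
# exhausting the thread limit. Set it BEFORE the workers are launched,
# because each worker inherits the environment at start-up.
Sys.setenv(OMP_NUM_THREADS = "1")

# Stand-in for plan(multisession, workers = 12): start two workers and
# ask each one what value of OMP_NUM_THREADS it sees.
cl <- makeCluster(2)
worker_omp <- unlist(clusterEvalQ(cl, Sys.getenv("OMP_NUM_THREADS")))
stopCluster(cl)

worker_omp
```

With future, the equivalent would be calling `Sys.setenv()` before `plan(multisession, workers = 12)`. The torch package also provides `torch_set_num_threads()`, which might serve the same purpose inside each worker; whether either change removes this particular error is untested.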

With the same code, if I replace the engine with "nnet", everything runs fine.

If I remove the parallel backend, i.e., run it sequentially, the following warning is displayed:

```
→ A | warning: Current loss in NaN. Training wil be stopped.

There were issues with some computations   A: x5
```
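Since the sequential run stops with a NaN loss, one thing that may be worth checking, assuming the predictors may reach brulee unnormalized or with missing values (the recipe is not shown above), is whether any numeric column has non-finite values or a very large spread. `recipes::step_normalize()` is one standard way to rescale predictors; the helper below is hypothetical and only inspects a data frame in base R:

```r
# Hypothetical diagnostic helper (not part of brulee or tidymodels):
# summarise each numeric predictor so that non-finite values or very
# different scales, common suspects for a NaN training loss, stand out.
check_predictors <- function(df) {
  num <- df[vapply(df, is.numeric, logical(1))]
  data.frame(
    column     = names(num),
    # count of NA, NaN, Inf and -Inf values in each column
    non_finite = vapply(num, function(x) sum(!is.finite(x)), integer(1)),
    # spread of the finite values only
    sd         = vapply(num, function(x) sd(x[is.finite(x)]), numeric(1)),
    row.names  = NULL
  )
}

# Example with a made-up data frame
toy <- data.frame(
  a = c(1, 2, 3, NA),
  b = c(1e6, 2e6, 3e6, 4e6),
  y = factor(c("yes", "no", "yes", "no"))
)
check_predictors(toy)
```

A column such as `b`, whose spread is several orders of magnitude larger than the others, is the kind of thing normalization would address.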