Thank you. In short, there are other previous codes that may be important for you to understand.

First, we have this code:

--------------------------------------------------------------

---------- Get Iteration Number ------------------------------

--------------------------------------------------------------

!/usr/bin/env Rscript

iter <- commandArgs(trailingOnly=TRUE)

print(iter)

iter <- as.numeric(iter)

----------------------------------------------------------------------

----- Load Packages --------------------------------------------------

----------------------------------------------------------------------

Panic Model Sim

library(PanicModel)

Parallel

library(foreach)

library(parallel)

library(doParallel)

----------------------------------------------------------------------

----- Specify Treatments ---------------------------------------------

----------------------------------------------------------------------

Updated based on info from Don on June 15

l_tx <- list()

baseline_weeks <- 0

baseline_days <- 1

Control group (no intervention)

l_tx[[1]] <- list("I1" = NULL, # Psychoeducation -> AS

"I2" = NULL, # Psychoeducation -> ES

"I3" = NULL, # Cognitive restructuring -> AS

"I4" = NULL, # Interoceptive Exposure -> A, E

"I5" = NULL) # In vivo exposure -> Context

Cognitive

l_tx[[2]] <- list("I1" = baseline_days+baseline_weeks7+c(1), # Psychoeducation -> AS

"I2" = baseline_days+baseline_weeks7+c(1), # Psychoeducation -> ES

"I3" = (baseline_days+baseline_weeks+(1:4)*7)+c(1), # Cognitive restructuring -> AS

"I4" = NULL, # Interoceptive Exposure -> A, E

"I5" = NULL) # In vivo exposure -> Context

Behavioral

l_tx[[3]] <- list("I1" = baseline_days+baseline_weeks7+c(1), # Psychoeducation -> AS

"I2" = baseline_days+baseline_weeks7+c(1), # Psychoeducation -> ES

"I3" = NULL, # Cognitive restructuring -> AS

"I4" = baseline_days+c((baseline_weeks+1)*7+1:28), # Interoceptive Exposure -> A, E

"I5" = baseline_days+(baseline_weeks+3)*7+(1:14)[rep(c(TRUE,TRUE), 7)]) # In vivo exposure -> Context

Cognitive + Behavioral

l_tx[[4]] <- list("I1" = baseline_days+baseline_weeks7+c(1), # Psychoeducation -> AS

"I2" = baseline_days+baseline_weeks7+c(1), # Psychoeducation -> ES

"I3" = (baseline_days+baseline_weeks+(1:2)*7)+c(1), # Cognitive restructuring -> AS

"I4" = baseline_days+c((baseline_weeks+1)*7+1:28), # Interoceptive Exposure -> A, E

"I5" = baseline_days+(baseline_weeks+3)*7+(1:14)[rep(c(TRUE,TRUE), 7)]) # In vivo exposure -> Context

NEW Cognitive + Behavioral [with focus on Escape]

l_tx[[5]] <- list("I1"=baseline_days+baseline_weeks7+c(1),

"I2"=(baseline_days+baseline_weeks+(0:2)7)+c(1), # Psychoeducation + Self-efficacy Training --> ES: Session 1-3

"I3"=NULL, # Cog Restructure --> AS: NULL

"I4"=(baseline_days+baseline_weeks7)+7+(1:(74)),

"I5"=baseline_days+(baseline_weeks+3)*7+(1:14)[rep(c(TRUE,TRUE), 7)])

----------------------------------------------------------------------

----- Iterate --------------------------------------------------------

----------------------------------------------------------------------

----- Iterating -----

Setup Parallelization

cluster <- 16

cl <- makeCluster(cluster, outfile="")

registerDoParallel(cl)

timer_total <- proc.time()[3]

set.seed(iter)

out_iter <- foreach(batch = 1:16,

.packages = c("PanicModel"),

.export = c("l_tx"),

.verbose = TRUE) %dopar% {

# ----- Inter-individual differences / initial values ------

# Arousal Schema & Escape Schema initial values

N <- 20 # making sure at least one is valid (see below)

AS_init <- rnorm(n=N, .8, .1) # creates ~20% panic attacks (matched to lifetime prevalence)

AS_initth <- AS_init[AS_init<=1 & AS_init>=0]

AS_init <- AS_initth[1]

ES_init <- rnorm(n=N, .2, .1)

ES_initth <- ES_init[ES_init<=1 & ES_init>=0]

ES_init <- ES_initth[1]

initial_spec <- list("S" = AS_init,

"X" = ES_init)

# ----- Treatment response parameters -----

# From Don: 28th April:

pars_spec <- list("Tx" = list("I123_alpha" = rbeta(n=1, 1, 9),

"I4Adh" = rbeta(n=1, 3, 2),

"I4RdEs" = rbeta(n=1, 3, 2)))

# ----- Simulate ------

time0 <- 0:(60*24*7*4) # 4 week baseline

# Simulate

out_0 <- simPanic(time = time0,

stepsize = .001,

parameters = pars_spec,

initial = initial_spec,

pbar = FALSE)

# Round to 3 decimals to save disk space

out_0$outmat <- round(out_0$outmat, 3)

# --- Feed last values of baseline in as initial values ---

last_row <- out_0$outmat[nrow(out_0$outmat), ]

initial_spec2 <- list("A" = last_row$A,

"S" = last_row$S,

"H" = last_row$H,

"PT" = last_row$PT,

"E" = last_row$E,

"X" = last_row$X)

# --- make batch specific seed ----

seed_batch <- as.numeric(paste0(iter, 999, batch))

# ----- Loop through 4 Treatment arms -----

l_out <- list()

time1 <- 0:(60*24*7*(5+12)) # 5 weeks treatment + 12 weeks follow up

for(group in 1:5) {

# ----- Simulate Control vs. Treatments -----

set.seed(seed_batch) # random input constant across treatment arms/groups

# Simulate

out_x <- simPanic(time = time1,

stepsize = .001,

parameters = pars_spec,

initial = initial_spec2,

tx = l_tx[[group]],

pbar = FALSE)

# Round to 3 decimals to save disk space

out_x$outmat <- round(out_x$outmat, 3)

l_out[[group]] <- out_x

} # end for: group

# ----- Return ------

outlist <- list("heter" = list("S" = AS_init,

"X" = ES_init,

"I123_alpha" = pars_spec$Tx$I123_alpha,

"I4Adh" = pars_spec$Tx$I4Adh,

"I4RdEs" = pars_spec$Tx$I4RdEs),

"out_baseline" = out_0,

"out_group1" = l_out[[1]],

"out_group2" = l_out[[2]],

"out_group3" = l_out[[3]],

"out_group4" = l_out[[4]],

"out_group5" = l_out[[5]],

"batchseed" = seed_batch)

return(outlist)

} # end foreach

print total time of nodes

print(paste0("Full Timing Iteration ", iter, ":"))

proc.time()[3] - timer_total

stopCluster(cl)

----------------------------------------------------------------------

----- Export ---------------------------------------------------------

----------------------------------------------------------------------

Save

saveRDS(out_iter, file = paste0("IntSim6_Iter", iter, ".RDS"))

This code creates minute-to-minute data. After running, this code will generate an RDS File (for an example of an RDS File please see: OSF).

Thus, when running the code I gave you, called sim_preprocess.R, which contains functions to map the minute-to-minute data of components, it is suppose to convert it to weekly symptom scores, and create other processed output. To remind, this the code sim_preprocess.R:

o.ryan@umcutrecht.nl; 20 July

This file process the raw simulated output found in the /simcode/ folder

As output it creates two files

outfiles.RDS - a list containing baseline and treatment group daily measures

# as well as information about treatment adherence and heterogeneity

symptoms_out.RDS - a list containing symptom measurements

-------------------------------------------------------------------------

----------------- Output 1: outfiles.RDS --------------------------------

-------------------------------------------------------------------------

library(abind)

source("symptom_functions.R")

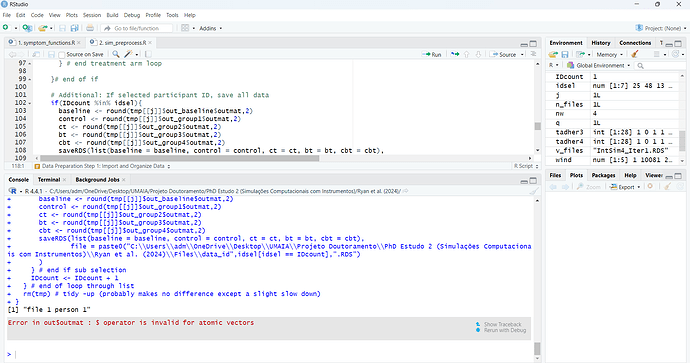

Data Preparation Step 1: Import and Organize Data -----

l_all <- list()

dir <- "Simulation/output/"

v_files <- list.files(dir)

n_files <- length(v_files)

symptoms <- list(baseline = list(), g1 = list(), g2 = list(),

g3 = list(), g4 = list(), g5 = list())

each file contains ~16 "people"

for each person we have a multivariate time series for

baseline + 5 treatment arms

we also have adherence rate (for treatments 3,4 and 5 only)

and a vector of between-person variable values (heter)

we don't need to extract anything else

IDcount <- 1

extract full information for two selected individuals

idsel <- c(25,55,133,207,429,134,168)

first 5 are bt responders, last two are ct responders

for(i in 1:n_files){

tmp <- readRDS(paste0(dir, "IntSim6_Iter", i, ".RDS"))

for(j in 1:length(tmp)){

if(IDcount < 501){

print(paste("file", i, "person",IDcount))

theter <- as.data.frame(tmp[[1]]$heter)

# round for size, take the sequence to reflect daily measurements

tout_baseline <- round(tmp[[j]]$out_baseline$outmat,2)[seq(1,nrow(tmp[[1]]$out_baseline$outmat), by = 60*24),]

tout_group1 <- round(tmp[[j]]$out_group1$outmat,2)[seq(1,nrow(tmp[[1]]$out_group1$outmat), by = 60*24),]

tout_group2 <- round(tmp[[j]]$out_group2$outmat,2)[seq(1,nrow(tmp[[1]]$out_group2$outmat), by = 60*24),]

tout_group3 <- round(tmp[[j]]$out_group3$outmat,2)[seq(1,nrow(tmp[[1]]$out_group3$outmat), by = 60*24),]

tout_group4 <- round(tmp[[j]]$out_group4$outmat,2)[seq(1,nrow(tmp[[1]]$out_group4$outmat), by = 60*24),]

tout_group5 <- round(tmp[[j]]$out_group5$outmat,2)[seq(1,nrow(tmp[[1]]$out_group5$outmat), by = 60*24),]

# add an "ID" indicator everywhere

tout_baseline$ID <- tout_group1$ID <- tout_group2$ID <- tout_group3$ID <- tout_group4$ID <- tout_group5$ID <- IDcount

# tadher1 <- tmp[[j]]$out_group1$adher;

# tadher2 <- tmp[[j]]$out_group2$adher

tadher3 <- tmp[[j]]$out_group3$adher

tadher4 <- tmp[[j]]$out_group4$adher

tadher5 <- tmp[[j]]$out_group5$adher

theter <- as.data.frame(tmp[[j]]$heter); theter$ID <- IDcount

# if this is the first entry, just create output objects

if(i ==1 && j == 1){

heter <- theter

out_baseline <- tout_baseline ; out_group1 <- tout_group1 ; out_group2 <- tout_group2

out_group3 <- tout_group3; out_group4 <- tout_group4 ; out_group5 <- tout_group5

adher3 <- tadher3

adher4 <- tadher4

adher5 <- tadher5

}else{ # otherwise, bind output objects together

heter <- rbind(heter,theter)

out_baseline <- rbind(out_baseline, tout_baseline)

out_group1 <- rbind(out_group1, tout_group1)

out_group2 <- rbind(out_group2, tout_group2)

out_group3 <- rbind(out_group3, tout_group3)

out_group4 <- rbind(out_group4, tout_group4)

out_group5 <- rbind(out_group5, tout_group5)

adher3 <- rbind(adher3, tadher3)

adher4 <- rbind(adher4, tadher4)

adher5 <- rbind(adher5, tadher5)

}

Create Symptoms Output

for(q in 1:6){

dframe <- tmp[[j]][[1+q]]$outmat

wind <- seq(1,nrow(dframe), 10080)

nw <- length(wind) -1 # number of weeks

weeks <- cbind(wind[1:nw], wind[2:(nw +1)])

outid <- cbind(t(apply(weeks,1,function(r){

getSymptoms(dframe[r[1]:r[2],])

})),IDcount)

colnames(outid)[1:11] <- c("q1_panic", "q2_padistress", "q3_fear", "q4_avoid","q5_context", "sumscore",

"n_panic","q2_distress_cont", "q3_fear_cont", "q4_avoid_cont", "q5_context_cont")

symptoms[[q]][[IDcount]] <- outid

} # end treatment arm loop

}# end of if

# Additional: If selected participant ID, save all data

if(IDcount %in% idsel){

baseline <- round(tmp[[j]]$out_baseline$outmat,2)

control <- round(tmp[[j]]$out_group1$outmat,2)

ct <- round(tmp[[j]]$out_group2$outmat,2)

bt <- round(tmp[[j]]$out_group3$outmat,2)

cbt <- round(tmp[[j]]$out_group4$outmat,2)

saveRDS(list(baseline = baseline, control = control, ct = ct, bt = bt, cbt = cbt),

file = paste0("Files/data_id",idsel[idsel == IDcount],".RDS")

)

} # end if sub selection

IDcount <- IDcount + 1

} # end of loop through list

rm(tmp) # tidy -up (probably makes no difference except a slight slow down)

}

n_id <- IDcount - 1

now merge some of these files together into arrays

out_treat <- abind(out_group1,out_group2, out_group3, out_group4,out_group5, along = 3)

adher <- list(NULL, NULL,adher3,adher4, adher5)

save files

saveRDS(list(out_baseline = out_baseline,

out_treat = out_treat,

adher = adher,

heter = heter),

file = "Files/outfiles.RDS")

save symptom file

saveRDS(symptoms, file = "Files/symptoms_out.RDS")

rm(list=ls())