Dear all,

Thank you for looking into my post. The United Nations Security Council adopts resolution after resolution, and I'd like to perform some basic text-mining tasks on the resolutions adopted between 2000 and 2018. That means downloading the documents with the symbols S/RES/1285 to S/RES/2446.

My challenges:

I would like to

- download a series of plain-text files with incrementing numbers

- store them in a list or a data frame where three fields are columns and each resolution is one row

My Data

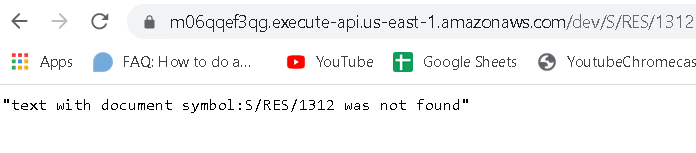

The series of text files is stored here: https://m06qqef3qg.execute-api.us-east-1.amazonaws.com/dev/S/RES/

The same content is also available, less conveniently, as HTML web pages here.

Each document has an associated sequential number, which appears at the end of the URL.

E.g., document S/RES/1285:

```r
URL <- "https://m06qqef3qg.execute-api.us-east-1.amazonaws.com/dev/S/RES/1285"
URL # plain text of the resolution
```
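A minimal sketch for reading one resolution, assuming the endpoint returns the resolution as plain text in the response body (as the comment above suggests). Base R's `readLines()` accepts a URL as well as a local file path, so `read_html()` is not needed for plain text. `fetch_resolution()` is a hypothetical helper name, not part of any package.

```r
# Hypothetical helper: read a plain-text resource (URL or local path)
# and collapse its lines into a single character string.
fetch_resolution <- function(url) {
  lines <- readLines(url, warn = FALSE, encoding = "UTF-8")
  paste(lines, collapse = "\n")
}

# Usage (requires network access):
# txt <- fetch_resolution("https://m06qqef3qg.execute-api.us-east-1.amazonaws.com/dev/S/RES/1285")
# cat(substr(txt, 1, 500))
```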

I'd like to download files 1285:2446 from this endpoint (basically the R counterpart to this curl command):

```shell
curl "https://m06qqef3qg.execute-api.us-east-1.amazonaws.com/dev/S/RES/[1285-2446]" -o "#1.txt"
```
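A base-R sketch of that curl command, assuming each URL returns one resolution and that files named like curl's `#1.txt` (e.g. `1285.txt`) may be written into a local folder. `download_all()`, the `resolutions/` folder name and the 0.5-second pause are illustrative choices, not requirements of the API.

```r
# Base URL from the post; resolution numbers 1285 to 2446.
base_url <- "https://m06qqef3qg.execute-api.us-east-1.amazonaws.com/dev/S/RES/"
ids <- 1285:2446

urls <- paste0(base_url, ids)
# Mirrors curl's -o "#1.txt": one file per resolution number (hypothetical folder).
destfiles <- file.path("resolutions", paste0(ids, ".txt"))

# Hypothetical helper: download each URL to its destfile, returning TRUE/FALSE per file.
download_all <- function(urls, destfiles, pause = 0.5) {
  for (d in unique(dirname(destfiles))) {
    dir.create(d, showWarnings = FALSE, recursive = TRUE)
  }
  ok <- logical(length(urls))
  for (i in seq_along(urls)) {
    ok[i] <- tryCatch(
      download.file(urls[i], destfiles[i], quiet = TRUE, mode = "wb") == 0,
      warning = function(w) FALSE,  # a failed request may surface as a warning
      error   = function(e) FALSE   # or as an error such as "cannot open URL"
    )
    Sys.sleep(pause)  # small delay between requests to be polite to the server
  }
  ok
}

# Usage (requires network access):
# ok <- download_all(urls, destfiles)
# ids[!ok]  # resolution numbers that failed
```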

My failed attempts to solve challenge 1: download and store sequential URLs

In the forum, I found this approach and adapted it to my case:

Packages used:

```r
library(tidyverse)
library(rvest)
```

Attempt a

```r
mandate <- list()
inshows <- 1285:2446 # sequence of resolutions

for (u in inshows) {
  url <- paste0('https://m06qqef3qg.execute-api.us-east-1.amazonaws.com/dev/S/RES/', u)
  mandate[[u]] <- read_html(url)
}
```
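One likely reason the list in attempt a looks empty: `mandate[[u]]` uses the resolution number as a *position*, so assigning to position 1285 pads positions 1 to 1284 with `NULL`. A small illustration, plus a variant that indexes by name instead (using the number as a character name is an illustrative choice, not something the API requires):

```r
# Assigning to position 5 of an empty list fills positions 1 to 4 with NULL.
x <- list()
x[[5]] <- "text of document 5"
length(x)                            # 5
sum(vapply(x, is.null, logical(1)))  # 4

# Indexing by name keeps only the elements that were actually assigned.
y <- list()
for (u in c(1285, 1286)) {
  y[[as.character(u)]] <- paste("text of resolution", u)
}
names(y)   # "1285" "1286"
length(y)  # 2
```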

I also tried a modification of this post:

Attempt b

```r
mandates <- lapply(paste0('https://m06qqef3qg.execute-api.us-east-1.amazonaws.com/dev/S/RES/', 1285:2446),
  function(url) {
    url %>% read_html()
  })
```

Rather unsurprisingly, both create empty lists of 1651 elements. Where would the text even come from in this code?

If anyone knows how to iterate over the sequence and download the files, I would greatly appreciate your help!

The resolutions are not stored as .txt files, nor are they HTML pages (so there are no hrefs to follow). Therefore I am wondering whether I am on the wrong track altogether.
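A sketch of a download loop that keeps the text itself rather than parsed HTML, assuming each URL serves plain text (the file extension does not matter to `readLines()`). `fetch_all()` is a hypothetical helper: failed requests become `NA` instead of stopping the loop, and the result is named by resolution number, so element `"1285"` holds S/RES/1285.

```r
# Hypothetical helper: fetch every resolution in `ids` from `base`,
# returning a named character vector (NA where a request failed).
fetch_all <- function(base, ids, pause = 0) {
  texts <- vapply(ids, function(id) {
    url <- paste0(base, id)
    out <- tryCatch(
      paste(readLines(url, warn = FALSE), collapse = "\n"),
      error = function(e) NA_character_
    )
    Sys.sleep(pause)  # optional delay between requests, in seconds
    out
  }, character(1))
  names(texts) <- as.character(ids)
  texts
}

# Usage (requires network access):
# base  <- "https://m06qqef3qg.execute-api.us-east-1.amazonaws.com/dev/S/RES/"
# texts <- fetch_all(base, 1285:2446, pause = 0.5)
# sum(is.na(texts))  # number of failed requests
```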

Challenge 2: Store the downloaded documents in a tidy format

In order to work with the text, I need the documents either in a list or in a data frame.

Ideally, the corpus would be structured along the following fields (they appear as patterns in the plain text):

- `doc_sym`: the document symbol, e.g. S/RES/1285(2000)

- `raw_txt`: the text body of the document

- `title`: the title, e.g. "Security Council resolution 1285 (2000) [on monitoring of the demilitarization of the Prevlaka peninsula by the UN military observers]"

- `year`: given in parentheses in `doc_sym` (not a separate field)
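A minimal parsing sketch, assuming the document symbol and the title appear verbatim in each raw text in the forms shown in the list above (e.g. `S/RES/1285(2000)` and `Security Council resolution 1285 (2000) [...]`). If the actual response uses a different layout, the regular expressions need adjusting. `first_match()` and `parse_resolutions()` are hypothetical helpers; the input is a character vector with one raw text per resolution, and the year is taken from the parentheses in `doc_sym`.

```r
# Hypothetical helper: first match of `pattern` in each text, NA when there is none.
first_match <- function(texts, pattern) {
  pos <- regexpr(pattern, texts, perl = TRUE)
  hit <- !is.na(pos) & pos != -1
  out <- rep(NA_character_, length(texts))
  out[hit] <- regmatches(texts, pos)  # regmatches returns only the matched elements
  out
}

# Hypothetical helper: one row per resolution with the four desired columns.
parse_resolutions <- function(texts) {
  # Assumed symbol pattern, e.g. "S/RES/1285(2000)" or "S/RES/1285 (2000)".
  doc_sym <- first_match(texts, "S/RES/\\d+ ?\\(\\d{4}\\)")
  data.frame(
    doc_sym = doc_sym,
    # Assumed title pattern: "Security Council resolution N (YYYY) [...]".
    title   = first_match(texts, "Security Council resolution \\d+ \\(\\d{4}\\) \\[[^\\]]*\\]"),
    # Year = the four digits inside the parentheses of doc_sym.
    year    = as.integer(first_match(doc_sym, "(?<=\\()\\d{4}(?=\\))")),
    raw_txt = unname(texts),
    stringsAsFactors = FALSE
  )
}
```

Rows whose text does not contain the assumed patterns get `NA` in the corresponding column, which makes it easy to inspect them with `subset(df, is.na(doc_sym))` and refine the patterns.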

I have absolutely no idea how to perform step two and am open to any and all suggestions.

Thank you very much!

Best,

Martin