Part 1: Imagine a rectangular world. This rectangular world is actually a network graph made of 1000 nodes, such that each node is only connected to all of its immediate neighbors only once. The nodes in the graph have id's from 1 to 1000, and each node is assigned a random value to represent the population at that point. Here is how everything looks like:

library(igraph)

width <- 30

height <- 20

num_nodes <- width * height

# Create a grid

x <- rep(1:width, each = height)

y <- rep(1:height, times = width)

g <- make_empty_graph(n = num_nodes, directed = FALSE)

# Function to get node index

get_node_index <- function(i, j) (i - 1) * height + j

# Add edges

edges <- c()

for(i in 1:width) {

for(j in 1:height) {

current_node <- get_node_index(i, j)

# Connect to right neighbor

if(i < width) edges <- c(edges, current_node, get_node_index(i + 1, j))

# Connect to bottom neighbor

if(j < height) edges <- c(edges, current_node, get_node_index(i, j + 1))

}

}

g <- add_edges(g, edges)

V(g)$x <- x

V(g)$y <- y

par(mfrow=c(1,2))

V(g)$name <- 1:num_nodes

plot(g, vertex.size = 7, vertex.label = V(g)$name, vertex.label.cex = 0.6, main = "Map with Node Indices")

V(g)$value <- sample(1:100, num_nodes, replace = TRUE)

plot(g, vertex.size = 7, vertex.label = V(g)$value, vertex.label.cex = 0.6, main = "Map with Population Values")

Part 2: Here is a function that finds the 4 nodes in the original network having the largest node sum:

sg <- subgraph_isomorphisms(make_ring(4), g)

lst <- unique(lapply(sg, \(x) sort(names(x))))

out <- do.call(

rbind,

lapply(

lst,

\(v) data.frame(

node_id = toString(v),

value = sum(V(induced_subgraph(g, v))$value)

)

)

)

Part 3: I then wrote a function to split the original network into 4 mini networks:

get_node_index <- function(i, j) (i - 1) * height + j

select_square_nodes <- function(g, x_start, x_end, y_start, y_end) {

nodes <- c()

for (i in x_start:x_end) {

for (j in y_start:y_end) {

nodes <- c(nodes, get_node_index(i, j))

}

}

return(nodes)

}

square1 <- select_square_nodes(g, 1, width/2, 1, height/2)

square2 <- select_square_nodes(g, (width/2) + 1, width, 1, height/2)

square3 <- select_square_nodes(g, 1, width/2, (height/2) + 1, height)

square4 <- select_square_nodes(g, (width/2) + 1, width, (height/2) + 1, height)

selected_nodes_list <- list(square1, square2, square3, square4)

plot_subgraph <- function(g, nodes, title) {

subg <- induced_subgraph(g, nodes)

plot(subg,

main = title,

vertex.size = 10,

vertex.label = V(subg)$name,

vertex.label.cex = 0.6)

}

par(mfrow = c(2, 2), mar = c(1, 1, 3, 1))

for (i in 1:length(selected_nodes_list)) {

plot_subgraph(g, selected_nodes_list[[i]], paste("Subgraph", i))

}

This the problem I want to solve: I want to use parallel computing to find out the 4 nodes in the original network with the largest node sum. I thought I could use parallel computing so that the different cores of my computer can each be assigned one of these mini networks - and within each network, the 4 nodes with largest sum can be identified. From here, I can then pick the largest sum:

library(parallel)

find_largest_sum <- function(nodes, g) {

subg <- induced_subgraph(g, nodes)

sg <- subgraph_isomorphisms(make_ring(4), subg)

lst <- unique(lapply(sg, \(x) sort(names(x))))

out <- do.call(

rbind,

lapply(

lst,

\(v) data.frame(

node_id = toString(v),

value = sum(V(induced_subgraph(subg, v))$value)

)

)

)

return(out[which.max(out$value), ])

}

cl <- makeCluster(4)

clusterExport(cl, c("g", "find_largest_sum", "selected_nodes_list", "induced_subgraph", "make_ring", "subgraph_isomorphisms", "V"))

results <- parLapply(cl, selected_nodes_list, find_largest_sum, g)

stopCluster(cl)

print(results)

[[1]]

node_id value

114 246, 247, 266, 267 352

[[2]]

node_id value

13 324, 325, 344, 345 340

[[3]]

node_id value

39 113, 114, 93, 94 369

[[4]]

node_id value

118 571, 572, 591, 592 331

Based on this, I thought we could conclude that nodes "246, 247, 266, 267" have the largest sum on the original network.

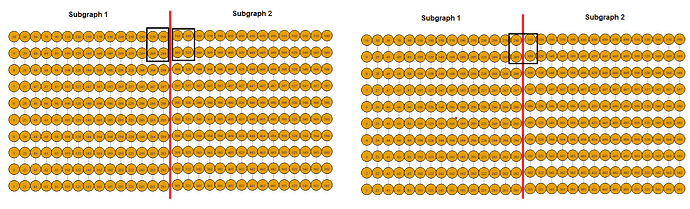

I thought everything was good - until I thought about the following problem! When splitting the network, won't we lose information in the process? Certain squares (i.e. those on the boundaries) will never be considered for comparison:

Can someone please suggest a way to solve this problem?