Hi,

I am reviewing calibration in tidymodels and want to confirm my understanding of when to use it.

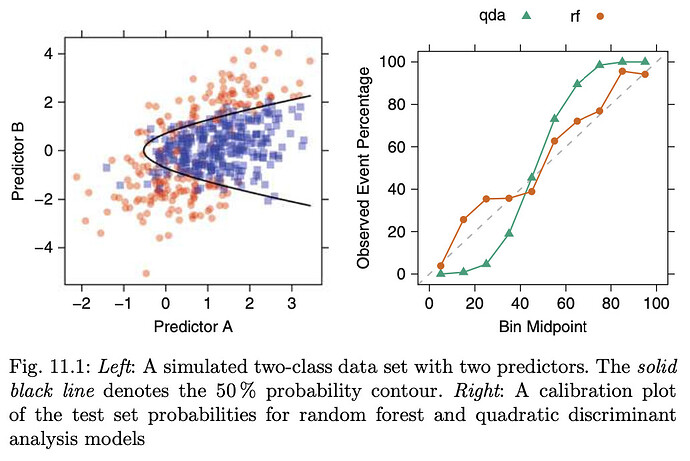

I read the two referenced papers. From what I can tell, the probabilities some models output are pushed closer to 0 and 1 than the actual rate of occurrence warrants. For example, if I score 100 instances and the average score is around 0.55, but the actual fraction of positives is 50%, that suggests a slight mismatch, so the scores are not well calibrated.
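To make that example concrete, here is a minimal Python sketch of the check I have in mind. The scores and labels are made up by me purely for illustration (8 rows instead of 100), and `gap` is just a name I chose:

```python
# Hypothetical predicted probabilities and true labels (illustration only).
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.3, 0.4]
labels = [1, 1, 0, 1, 0, 0, 1, 0]

# Average predicted probability of the positive class.
mean_score = sum(scores) / len(scores)

# Fraction of rows that are actually positive.
positive_rate = sum(labels) / len(labels)

# A non-zero gap means the scores over- or under-state the event rate on average.
gap = mean_score - positive_rate
print(f"mean score={mean_score:.2f}, positive rate={positive_rate:.2f}, gap={gap:+.2f}")
```

As far as I understand, a gap of zero overall would not prove good calibration, since errors in different score ranges can cancel out.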

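The probably package helps here by letting you plot the observed rate of occurrence across the predicted probability range. If the result is not close to the diagonal (a straight line where the observed rate equals the predicted probability), the calibration is problematic.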
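This is how I picture the numbers behind such a plot, as a rough Python sketch. It is not the probably API; `calibration_bins` is a hypothetical helper I wrote for illustration, and the data are made up:

```python
def calibration_bins(scores, labels, n_bins=5):
    """Group predictions into equal-width score bins and return
    (mean predicted score, observed event rate, count) per non-empty bin."""
    bins = [[] for _ in range(n_bins)]
    for s, y in zip(scores, labels):
        idx = min(int(s * n_bins), n_bins - 1)  # a score of 1.0 goes in the last bin
        bins[idx].append((s, y))
    rows = []
    for members in bins:
        if not members:
            continue  # skip bins that received no predictions
        mean_score = sum(s for s, _ in members) / len(members)
        event_rate = sum(y for _, y in members) / len(members)
        rows.append((mean_score, event_rate, len(members)))
    return rows


# Hypothetical data, chosen so that each bin is perfectly calibrated.
scores = [0.05, 0.15, 0.35, 0.45, 0.55, 0.65, 0.85, 0.95]
labels = [0, 0, 0, 1, 0, 1, 1, 1]
rows = calibration_bins(scores, labels, n_bins=2)
for mean_score, event_rate, count in rows:
    print(f"predicted={mean_score:.2f} observed={event_rate:.2f} n={count}")
```

If I understand correctly, plotting observed against predicted for each bin gives the curve, and points far from the diagonal indicate the score ranges where the model is miscalibrated.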

From my understanding of the topic, poorly calibrated probabilities can occur with unbalanced datasets, and possibly with regularization or over/underfitting? If unbalanced data is the issue, wouldn't upsampling/downsampling be a remedy when it is applied only while training within the resamples, assuming the test fold of each resample (which the model is applied to) stays unbalanced?
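Part of why I am unsure: as far as I can work out, downsampling the negatives during training would itself shift the scores relative to the unbalanced test fold. Below is a small Python sketch of that reasoning, assuming an idealized model that is perfectly calibrated on whatever data it is trained on and that a fixed fraction of negatives is kept at random. The function names and numbers are mine, not from any package:

```python
def downsampled_score(p, keep_fraction):
    """Probability an idealized model would report after training on data
    where only `keep_fraction` of the negatives were kept (assumption:
    negatives are dropped at random, positives are all kept)."""
    return p / (p + keep_fraction * (1.0 - p))


def corrected_score(p_s, keep_fraction):
    """Invert the shift above to get back to the original event rate scale."""
    return keep_fraction * p_s / (keep_fraction * p_s + 1.0 - p_s)


true_p = 0.10  # hypothetical true event probability for some case
shifted = downsampled_score(true_p, keep_fraction=0.25)
recovered = corrected_score(shifted, keep_fraction=0.25)
print(f"true={true_p:.3f} after downsampling={shifted:.3f} corrected={recovered:.3f}")
```

If this sketch is right, the resampled model would overstate the event probability on the untouched test fold, which is why I wonder whether calibration would still be needed afterwards.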

I understand my questions are not very concise, but if anyone could confirm my understanding, that would really help.

Thanks for your time