Hi, ![]()

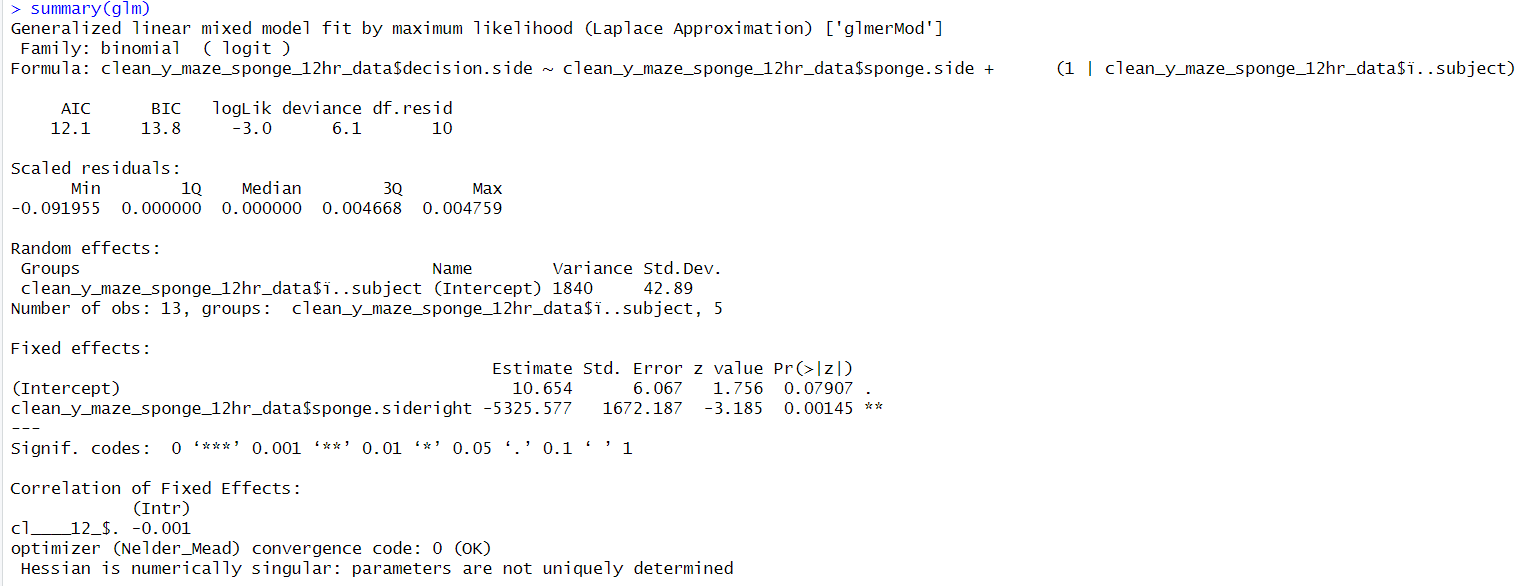

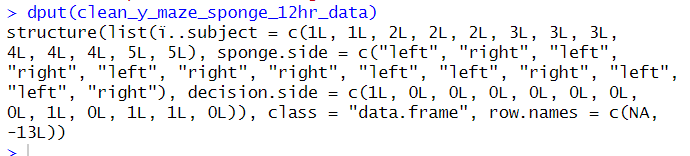

I'm needing some help interpreting the output of a binary logistic regression GLMM that accounts for individual subjects being used in 3 replicate trials each. My experiment is looking at whether sponge.side is a significant predictor of decision.side (whether a subject is attracted to the side a marine sponge is on in a Y maze experiment). I'm worried because the estimate is unusually large (see image below) and gives the output "Hessian is numerically singular: parameters are not uniquely determined". I'm wondering if this is occurring because my experiment is only made up of 15 trials total, with 5 subjects being used in 3 replicates each. I have attached the r code and image of the output below.

Help would be greatly appreciated! Thanks in advance ![]()

Load packages -- do this every time

library(lme4) # For lmer function

#Loading required package: Matrix

library(car) # For F-tests, likelihood ratio and Wald chi-squared tests

library(dplyr)

Read data into a data frame

y_maze_sponge_12hr_data <- read.csv("sponge_12hr.csv")

head(y_maze_sponge_12hr_data)

Sample sizes

table(y_maze_sponge_12hr_data$sponge.side)

#remove NAs

clean_y_maze_sponge_12hr_data <- y_maze_sponge_12hr_data %>%

filter(!is.na(decision.side))

table(clean_y_maze_sponge_12hr_data$sponge.side)

#frequency table

net = table(clean_y_maze_sponge_12hr_data$decision.side,clean_y_maze_sponge_12hr_data$sponge.side); net ###########

sponge.side = as.factor(clean_y_maze_sponge_12hr_data$sponge.side)

Fit a mixed model##############

glm = glmer(clean_y_maze_sponge_12hr_data$decision.side ~ clean_y_maze_sponge_12hr_data$sponge.side + (1 | clean_y_maze_sponge_12hr_data$ï..subject), family = binomial)

summary(glm)

#p val = 0.00145 ** (Accounting for repeated measures)