I was able to set up a connection using odbc and dbplyr:

con <- dbConnect(odbc::odbc(), "myHive")

After running this in the console, the Connections pane appears and shows many (many) entries. It's a list of our different schemas.

Most of the documentation out there shows connecting to a table like this:

mytbl <- tbl(con, "some_table")

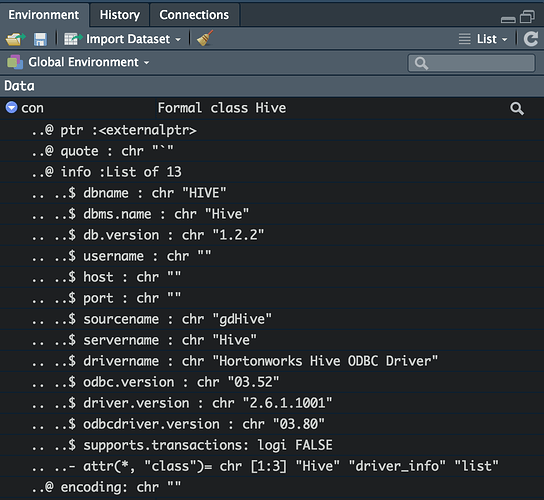

This will not work here since con is not defaulted to some particular schema (I think?). Here's a screen shot of con:

I found a post telling me that to select a particular table from a schema I can use in_schema:

mytbl <- tbl(con, in_schema("mydb", "mytable"))

A few things:

- The variable mytbl does not appear to be a tbl but instead a list of two elements. I expected it to be a tbl representation of the table mydb.mytable.

- My understanding is that after creating mytbl and then applying transformations such as select, filter, mutate, etc., dbplyr will not actually pull any data until I call collect() at the end. However, R takes a very long time just to run the mytbl definition. Is my way of defining mytbl not the intended one? How can I select a table from a schema and store it as a tbl?
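
To make the expectation concrete, here is roughly the workflow I have in mind. This is only a sketch, and it runs against an in-memory SQLite database (via RSQLite) as a stand-in for the Hive DSN, so the schema name "main" and the columns id and value are made up for illustration. With the real connection the schema would be "mydb".

```r
library(dplyr)
library(dbplyr)

# Stand-in for the Hive connection: an in-memory SQLite database
# (assumption for illustration; the real code uses odbc::odbc() and "myHive").
con <- DBI::dbConnect(RSQLite::SQLite(), ":memory:")
DBI::dbWriteTable(con, "mytable", data.frame(id = 1:3, value = c(10, 20, 30)))

# SQLite's default schema is "main"; with Hive this would be "mydb".
mytbl <- tbl(con, in_schema("main", "mytable"))

# Inspect what came back: check the class vector as well as is.list(),
# since a classed object can be built on top of a list.
class(mytbl)
is.list(mytbl)

# Transformations only build up a query; no rows are pulled into R yet.
qry <- mytbl %>%
  filter(value > 10) %>%
  select(id, value)

# Print the SQL that dbplyr would send to the database.
show_query(qry)

# collect() runs the query and returns a local data frame.
result <- collect(qry)

DBI::dbDisconnect(con)
```

Checking class(mytbl) rather than only looking at the Environment pane should show whether the object is really a plain list or a tbl that happens to be stored as one.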